Welcome

Welcome to Chuck Rush's work history page.

I spent most of 2012 and half of 2013 looking for a job. Back then I wished I had a way, other than a resume, to show folks what I have done over the years. I always thought a work experience web site would be a good idea. But I was working full time, had one kid at home, my wife was working down near New York City, and I was looking for a job. I had no experience doing web programming and time was at a premium, so the site was never created.

Well, now, the kids are gone, and I have a job in the same area where my wife works. I work at Persistent Systems LLC in New York City. It is a really good place to work and I enjoy going to work every day. I have no plans to work anywhere else. Now that the kids are gone I have time for professional development. Since I have folks on my team who are web wizards, learning some more web programming seemed like a good place to start. Putting together a work experience web site, a long-delayed project, was first on the schedule.

I have had some cool, interesting jobs over the years and have worked with some really great people. Below is a summary of that work.

Quick Links

- Resume
- Persistent Systems
- SRC
- InfiMed
- UCHSC - Visible Human Lab
- Akron Standard
- Firestone Tire
- Antarctica
- Solar Pond

Resume

View resume (.pdf file)

Persistent Systems - Ad Hoc Networks - Programming

I started at Persistent Systems on July 8, 2013. At that time the company employed fewer than 30 people. Now we have close to 200 employees, and the company did over $80 million in sales in 2019. It has been quite a ride.

The company was started by Dr. David Holmer, our C.T.O., and Dr. Herb Rubens, our C.E.O. These two guys are way, way, way smarter than the average bear. They have shepherded the company from two guys to its present size without taking one cent of venture capital money. What impresses me is that they know how to let go as the company grows. They have hired a C.F.O., shipping and material managers, a seasoned H.R. professional, and whatever other managerial and technical expertise was needed to meet the needs of a growing company. I always feel like a suck-up when I say it, but I have 100% faith in the leadership of this company.

We all know that a company must make money to thrive and grow. Our leadership knows that too. But what I find really cool is that the leadership truly wants to design, manufacture, and sell products they believe our customers need. This applies to all our customers, but even more so to our military customers. Our leadership and all our employees care deeply about equipping our armed forces with gear that lets them do their jobs as effectively as possible.

Folks ask me, "What does your product do?" Internally, I always smile and think "It kicks ass!" but then I say, "Have you seen the Terminator movie battle scenes with the swarms of drones, robots, and ground vehicles? If SkyNet were real, it would use Wave Relay® products for its communications. Pop one of our embedded boards in every robot and BAM, you have a self-forming, self-healing network."

I don't want to talk too much about what our product does or how it is used. I'd prefer the bad guys find that out first hand. But I will say it is a first class product, designed, built, maintained, and supported by a highly dedicated group of professionals.

SRC Inc. - Electronic Warfare & Air Surveillance Radar - Programming and Project Lead

Birth of the LCMR (Light Counter Mortar Radar) - Way Cool!

I did not work on the LCMR while I was at Syracuse Research Corporation. However, it was one of the things that made me proud to work there. This article about the birth of the LCMR is one of the coolest things you will ever read.

Electronic Warfare Receiver - Software Lead

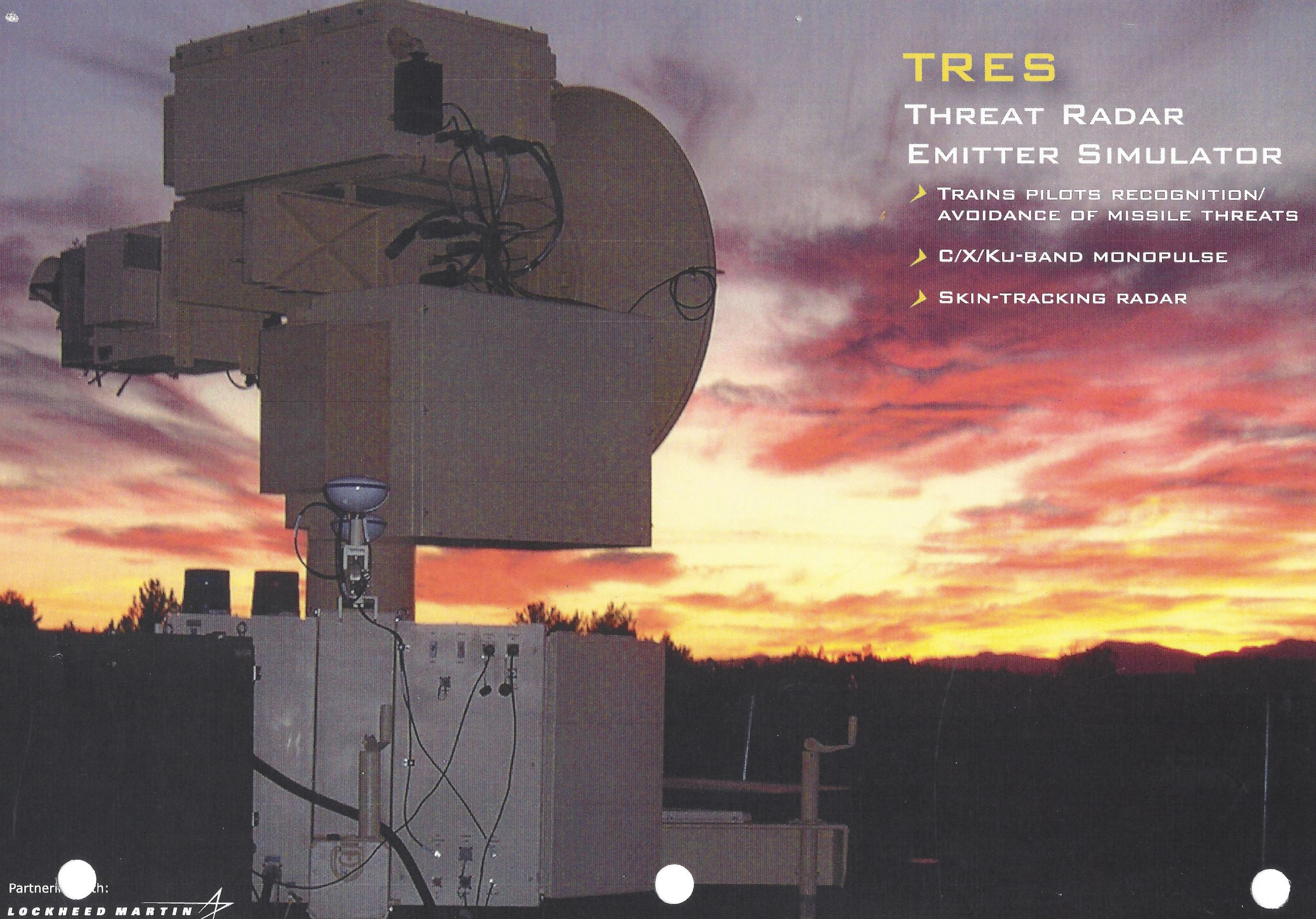

Thinking back on this project always makes me smile. At SRC, Bob Iodice was our Program Manager, Mike Addario and Jason Steeger were our hardware/FPGA guys, and Jeff Fontanella and Jeff Mahon were our systems guys. It was a great crew. The program was called TRES, Threat Radar Emitter Simulator. SRC designed and built the threat receiver for the Lockheed Martin crew out of Ridgecrest, California. The TRES guys were great too: Dave Tesdall was software lead, Frank Argain was their software guy, and Bill Kinney and Gary Johnson were their systems guys. They were, and still are, a great bunch.

The TRES systems are used on aircraft training ranges to simulate enemy weapon systems. An aircrew flies a simulated mission and the TRES system locks on as a simulated enemy weapon system. The threat receiver monitors the signals emitted by the radar and the signals emitted by the aircraft when the flight crew employs countermeasures. The receiver generates reports on the flight crew's response and the overall encounter. This information is used in post-mission analysis.

By volume, the threat receiver was a small part of the system. It received and analyzed radar signals. Look for the small brown box on the far left in the middle picture.

This was a challenging project. The TRES system is used to model many different threats. One variant simulated a 360-degree surveillance radar used to monitor a large airspace. When a target was detected, it would hand off the contact to a monopulse tracking TRES variant that simulated a missile system. There was also a shore-based radar that pointed in a particular direction and then rotated left to right over a set number of degrees.

One of my jobs was to modify the LCMR tracker to work with all three TRES variants: 360-degree surveillance, monopulse tracking, and shore-based ship detection. The software was configurable, with one code base for all the TRES variants.

Flying an aircraft to test a radar system is expensive and logistically difficult. To test the tracker I needed a way to simulate flight paths. It turns out there is an SDK for Microsoft Flight Simulator that lets you get the aircraft's position, speed, pitch, roll, yaw, and many other flight parameters. I wrote some code to grab the aircraft data and turn it into radar detection data. Then I would play the data back, feed the detections to the receiver, and track my simulated flight. This came in handy for testing, and I was happy when other projects used the code too. Never did get the hang of landing the aircraft, though.
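
Turning a simulated aircraft position into a radar detection boils down to expressing the aircraft's latitude, longitude, and altitude as range, azimuth, and elevation relative to the radar. The sketch below is an illustration of that geometry, not the original TRES code: it does not call the Flight Simulator SDK, it assumes position samples have already been pulled out as plain numbers, and the names `to_detection` and `Detection` are hypothetical. It uses a flat-earth (equirectangular) approximation, which is only reasonable for targets within a few tens of kilometres of the radar.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius; adequate for a local flat-earth approximation


@dataclass
class Detection:
    range_m: float
    azimuth_deg: float    # clockwise from true north, in [0, 360)
    elevation_deg: float  # above the radar's local horizontal


def to_detection(radar_lat, radar_lon, radar_alt_m, ac_lat, ac_lon, ac_alt_m):
    """Convert one aircraft position sample (degrees, metres) into a detection.

    Assumption: small distances, so east/north offsets are computed on a flat
    plane tangent to the Earth at the radar site.
    """
    lat0 = math.radians(radar_lat)
    d_lat = math.radians(ac_lat - radar_lat)
    d_lon = math.radians(ac_lon - radar_lon)
    east = d_lon * math.cos(lat0) * EARTH_RADIUS_M
    north = d_lat * EARTH_RADIUS_M
    up = ac_alt_m - radar_alt_m
    horizontal = math.hypot(east, north)
    return Detection(
        range_m=math.sqrt(horizontal ** 2 + up ** 2),
        azimuth_deg=math.degrees(math.atan2(east, north)) % 360.0,
        elevation_deg=math.degrees(math.atan2(up, horizontal)),
    )
```

Running a recorded sequence of position samples through a function like this, one per simulation tick, yields a detection stream that can be replayed into a tracker as often as needed.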

Before I worked on TRES, I worked on a similar system for a different customer. It was a classic contract where the requirements were created up front. We implemented to the requirements and delivered the system. The only problem was that, although we met the requirements, the system did not really meet the customer's needs.

For the TRES project we had weekly telephone calls with the customer to discuss program progress and technical requirements. At the end of the project the customer got exactly what they wanted, and the systems worked extremely well. In addition, as the weeks went by we all got to know each other, and over time real trust developed between us. Yes, we were a vendor supplying a customer, but we also became friends. I still occasionally call Frank Argain to chat. This project was one of the high points of my career.

Chem-Bio Integration Project - Software Lead

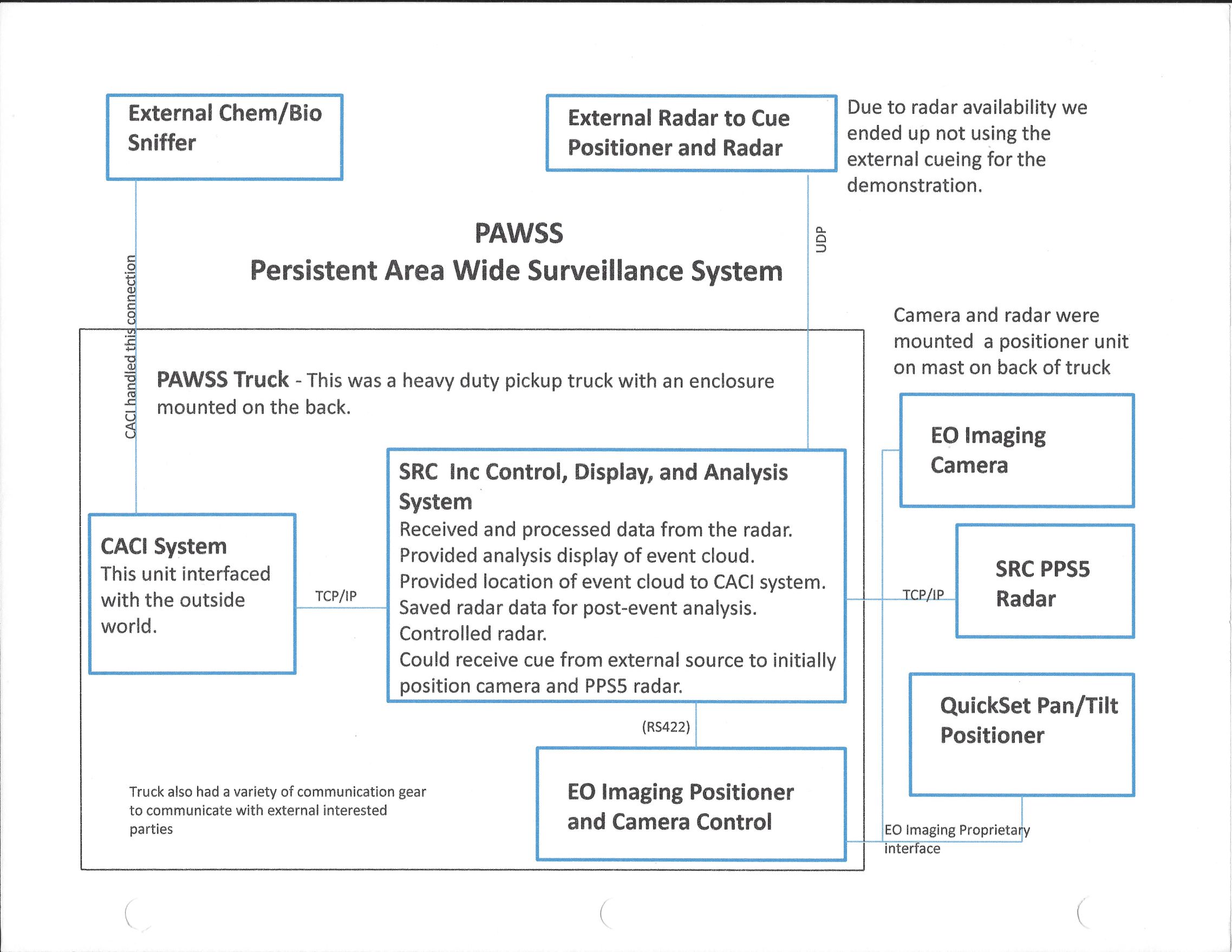

I don't have any super cool pictures of this project. The SRC part of this project was a radar that could detect gas clouds. The original concept was for the range to fire off an artillery shell carrying a simulated chemical agent. An LCMR would detect the shell and tell the PAWSS radar where to point. It would also tell a chemical sensor from another vendor to point at the cloud. The PC hooked to the radar would gather data and pass it off to an analysis package that determined the composition, size, density, etc. of the gas cloud. For the demo, there were no LCMRs available, so we just pointed the radar to where we knew the shell was going to detonate.

Another demo involved detecting and analyzing simulated chemical agents dispersed from an airplane. An external radar, which was not available at the time, was supposed to cue our system. We ended up relying on our eagle-eyed systems engineer, Jeff Fontanella. Without fail, he was the first one to spot the plane.

My role in the project was twofold.

- Create the communications ICD (Interface Control Document) for all the vendors' systems in the PAWSS truck. This involved negotiating the message formats and getting them approved by the team.

- Do all the programming for pointing the radar, acquiring the radar data, and sending it to the analysis system.

Here is a government document that mentions the PAWSS project. Click here

LSTAR® Air Surveillance Radar - Software Lead

I had the privilege of serving as the lead software engineer for the SRC Inc LSTAR® radar. At that time, the LSTAR® came in two flavors, V2 and V3. The V2 fits in two large cases and can be transported in the back of an SUV. The V3 comes in more cases than can fit in an SUV. The LSTAR® is a 360-degree air surveillance radar that excels at seeing and tracking low and slow flying objects.

Before I say any more about my work on LSTAR®, I want to say that everything described below was a team effort. We had great software, hardware, and systems folks on this project. In addition, we had great leadership from the PM level and up. SRC Inc is a well run company with really good engineers. I also don't want to forget the non-engineering folks who keep the product shipping, the equipment working, and all the other things that keep a company running smoothly.

LSTAR® was a young product when I took over as lead software engineer. It had been designed, built, and installed at some initial locations and was working well. It was time to grow the product.

At the same time, drones were coming into their own and being recognized as a threat. SRC started to think about integrating various products it manufactured into a system of systems to fill the anti-drone role. We took the LSTAR® to various demo and test events related to anti-drone functionality.

The LSTAR® radar was used by the UK as one component of its strategy to ensure the safety of the 2012 Summer Olympics. It was pretty cool that a radar I was responsible for was used at an event of that size and importance. In coordination with our on-site guy, I coded up a performance improvement and deployed it during the Olympics. It worked, but it was a bit nerve-wracking.

The UK folks also used LSTAR® at the G8 Summit in 2013.

Learn more about LSTAR® on the SRC Inc LSTAR® page.

After I left, development continued on using LSTAR® in anti-drone systems. This is one of the many cool articles you can find on the web about this subject.

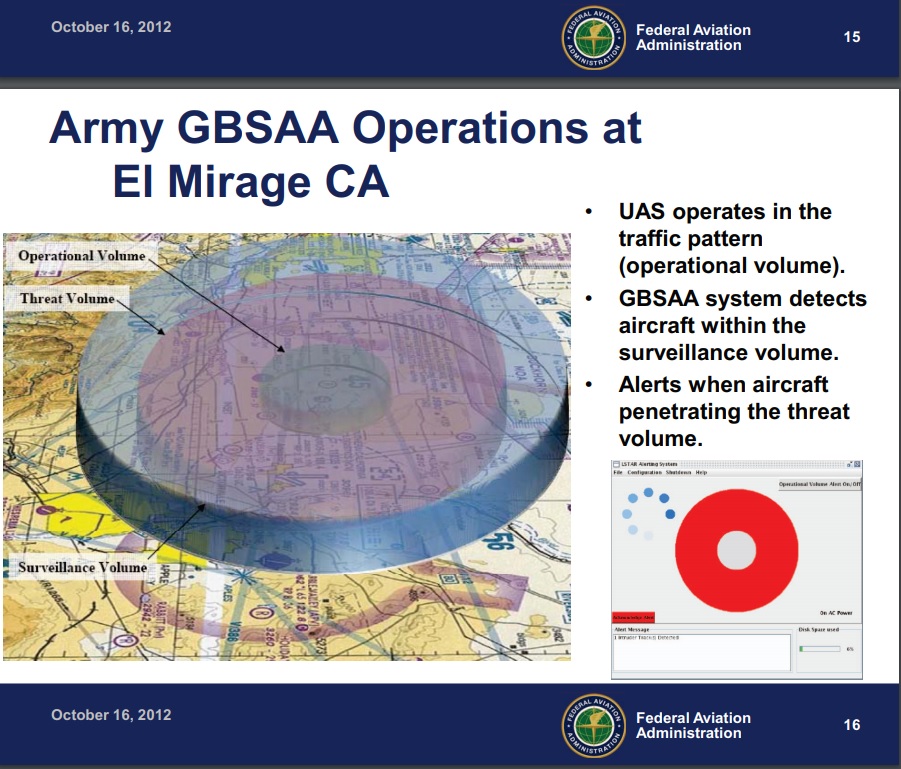

LSTAR® First in the Nation Unattended Night Flight of UAS in National Air Space - Software Lead

This project was ramping up when I transitioned from TRES Software Lead to LSTAR® Software Lead. At that time, if you wanted to fly a drone in the national airspace you had to either keep eyes on the drone while in flight, which limits the operational range, or employ a chase plane to follow the drone around while it is flying. Neither option is viable at night.

This system employed LSTAR® radars to monitor a volume of airspace around where drone flight operations were done. An SRC-written application monitored the output of the radars and alerted the drone pilot when an aircraft was approaching.

This prototype project was a success; we did fly unattended at night. But, and there is always a but in the engineering world, on the second night a test application failed. It was used after operations to verify that the system was operational. The system software was fine; the test software malfunctioned. The team found the bug within 24 hours. It was a simple, safe fix. Within 48 hours we had generated a 57-page test report showing the fix worked. Nevertheless, the program was shut down. It still hurts.

A follow-on project was started using the FAA DO-178 software development process. I was still LSTAR® lead but was not on the GBSAA (Ground Based Sense and Avoid) project. SRC Inc, working with the Army and its contractors, developed and deployed multiple GBSAA systems. In November of 2018 I corresponded with the Program Lead from the El Mirage Effort. He stated "...we now have 'Block 1 GBSAA' systems fielded at 5 Army sites: Fort Hood, Fort Campbell, Fort Bragg, Fort Campbell, and Fort Stewart. The system is working very well and the soldiers love it - we now have supported over 1150 flights and nearly 4000 hours of UAS flights in the NAS."

Here is an example of how the LSTAR® is used for air space management in real life.

InfiMed Inc - Digital X-Ray - Device Driver and Embedded Programming

I worked on three major systems while at InfiMed.

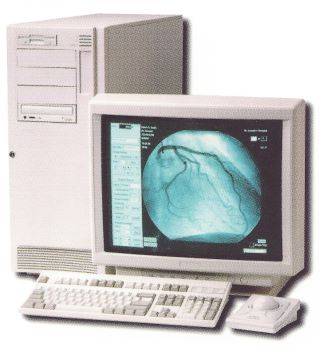

GoldOne

GoldOne was a general radiology/fluoroscopic imaging system. In the old days the x-rays would pass through your body and hit the x-ray film. Then the film would be developed and put on a light box, where a radiologist would read it. The GoldOne system replaced the film cartridge with a CCD camera, so the image was captured and processed digitally. This resulted in a much lower dose to the patient and a more convenient workflow.

InfiMed's product before GoldOne was getting old and sales were lagging, so it was important to get GoldOne done as quickly as possible. The team worked 6.5 days a week for about 18 months. It was grueling. Fortunately, we had a fantastic group of engineers. The fun thing about doing the device drivers was developing the code in parallel with the hardware. Working with a hardware guy to bring up a brand new board was really fun.

I was hired to write Windows 95 device drivers for a set of custom-designed PCI cards: a RAID card, a memory card, a display card, a scan converter card, and an x-ray film printer card. My device driver experience before this job consisted of copying code from a magazine for a Windows NT port driver. It was a risk hiring me. Turned out OK, though. Rather than use the Windows DDK, we bought a C++ based Windows device driver package. At that time there was very little published information on Windows device drivers. I found some articles and a book by Walter Oney that saved the day. Thanks, Walter!

The hardware clocked in new register values every 33 milliseconds, and interrupt-driven state machines sequenced the data through the system. Windows 95 was not a real-time operating system, but through careful design and implementation the system ended up working really well.

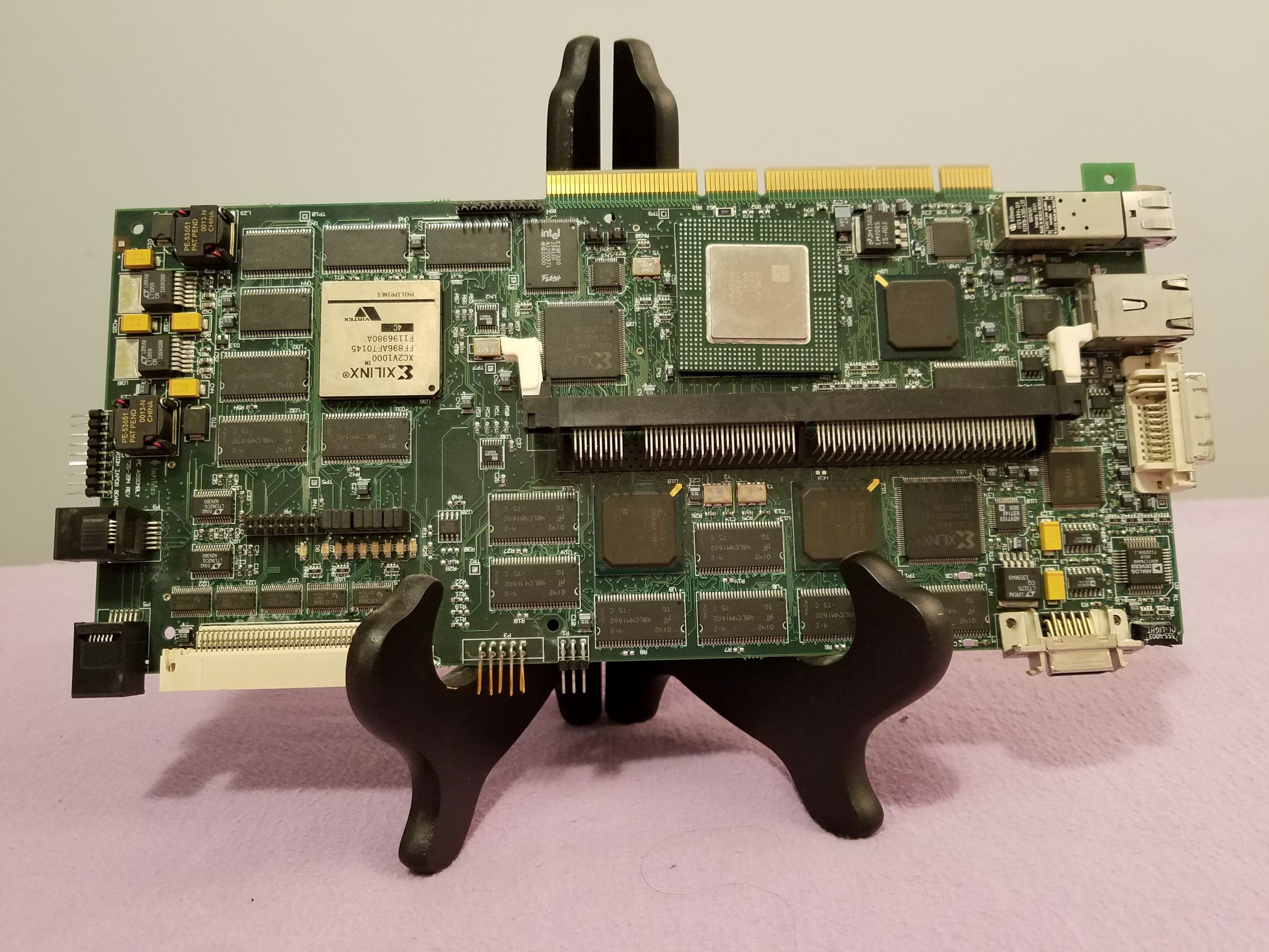

PlatinumOne and PlatinumOne Cardiac

PlatinumOne was the next generation product after GoldOne. It was a single board hardware solution. It implemented the same functionality as the multiple board GoldOne solution.

PlatinumOne Cardiac was add-on functionality for the PlatinumOne. This was a high-stress project. The system is used while a radiologist is guiding a catheter from someone's thigh up into their heart. It has to work reliably and well, every single time. While developing this product the team was told, 'Take as long as you need. We will ship it when it is done and working well.' As an engineer, you don't normally hear that from management.

I worked on the PCI-based Image Acquisition, Processing, and Display Board (IAPDB) from conception and initial board programming through development and production. The board had an Intel 80303 chip on it. The software on the chip controlled four Xilinx-based PCI devices hung on a secondary PCI bus on the card. The four Xilinx devices interfaced with a video image bus connected to an InfiMed-designed 1K x 1K CCD camera. Each of the Xilinx PCI devices had memory regions that mapped to control and status registers on the device. Control values, image data, and video overlay data were written to the processing chain every 33 milliseconds. The Xilinx-based PCI device pulled the 30 fps image data off the image bus and transferred it by DMA to onboard memory on the IAPDB. From there, image transfer and management code on the 80303 transferred the image data to PC memory and eventually to an onboard disk subsystem. I designed, implemented, and tested the majority of the code on the IAPDB. I also wrote the W2K driver the Windows application used to communicate with the IAPDB. I thoroughly enjoy working at the hardware/software boundary. It is challenging, rewarding, and a whole lot of fun.

Trixel Flat Panel Integration

This was a research and development effort, and it did not go to production during my time at InfiMed. It gave me an opportunity to do some image processing work. The panels were not perfect: there were spots on the sensor where pixels or groups of pixels were dead. I wrote code to detect the dead pixels and correct the errors. I also wrote the low-level interface code to communicate with the sensor.
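
One common way to handle dead pixels on a flat panel, shown here as a sketch and not as the code I wrote at InfiMed, is to flag pixels in a uniformly exposed (flat-field) frame that differ sharply from the median of their 3x3 neighbourhood, then replace each flagged pixel with the mean of its good neighbours. The function names and the `threshold` parameter are hypothetical. A 3x3 median only catches isolated defects and the corners of small clusters, so a production system would more likely rely on a stored defect map; the correction step below does handle clusters, filling them from the outside in.

```python
from statistics import median


def _neighbours(rows, cols, r, c):
    """Yield the in-bounds 8-neighbour coordinates of pixel (r, c)."""
    for rr in range(max(0, r - 1), min(rows, r + 2)):
        for cc in range(max(0, c - 1), min(cols, c + 2)):
            if (rr, cc) != (r, c):
                yield rr, cc


def find_dead_pixels(flat, threshold):
    """Return (row, col) pixels of a flat-field frame that differ from the
    median of their 3x3 neighbourhood by more than threshold."""
    rows, cols = len(flat), len(flat[0])
    dead = set()
    for r in range(rows):
        for c in range(cols):
            ref = median(flat[rr][cc] for rr, cc in _neighbours(rows, cols, r, c))
            if abs(flat[r][c] - ref) > threshold:
                dead.add((r, c))
    return dead


def correct_dead_pixels(image, dead):
    """Return a copy of image with each dead pixel replaced by the mean of its
    good (or already repaired) 8-neighbours. Each pass repairs the pixels that
    touch at least one good pixel, so dead clusters fill in from the edges."""
    out = [row[:] for row in image]
    rows, cols = len(out), len(out[0])
    remaining = set(dead)
    while remaining:
        repaired = {}
        for r, c in remaining:
            good = [out[rr][cc] for rr, cc in _neighbours(rows, cols, r, c)
                    if (rr, cc) not in remaining]
            if good:
                repaired[(r, c)] = sum(good) / len(good)
        if not repaired:
            break  # nothing left with a good neighbour to borrow from
        for (r, c), value in repaired.items():
            out[r][c] = value
        remaining.difference_update(repaired)
    return out
```

Detection would typically run once per panel during calibration, while correction runs on every acquired frame using the saved set of dead pixels.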

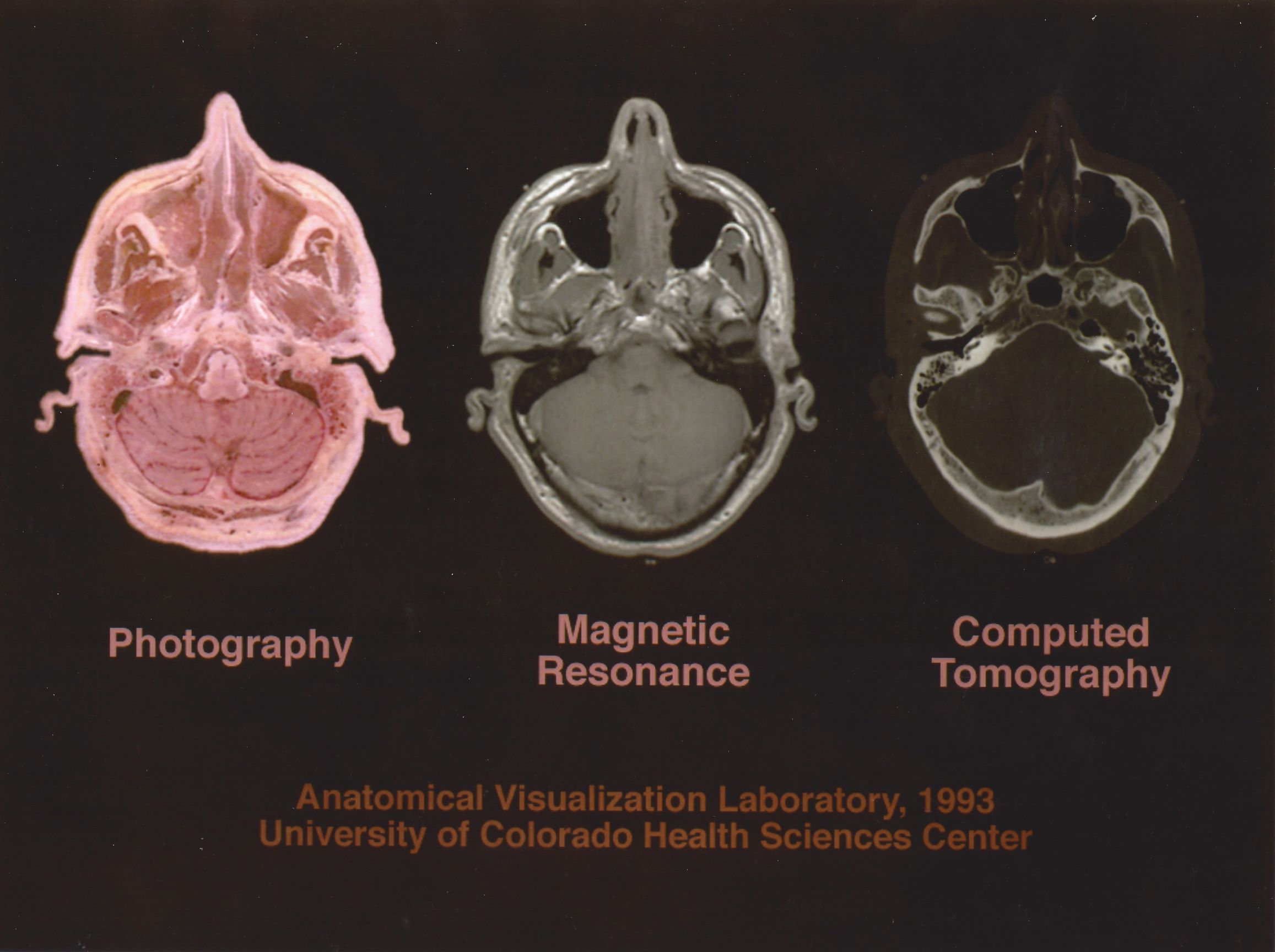

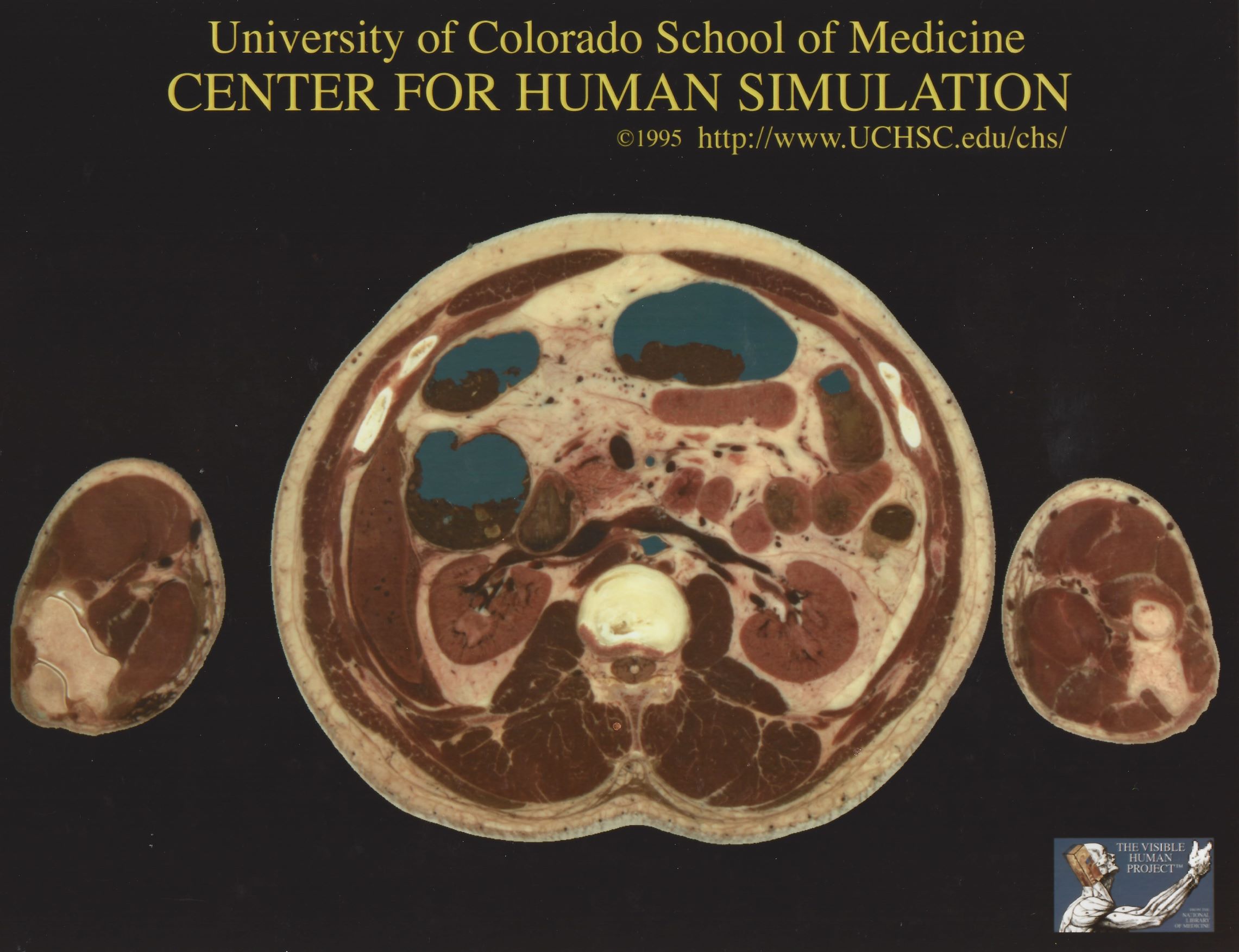

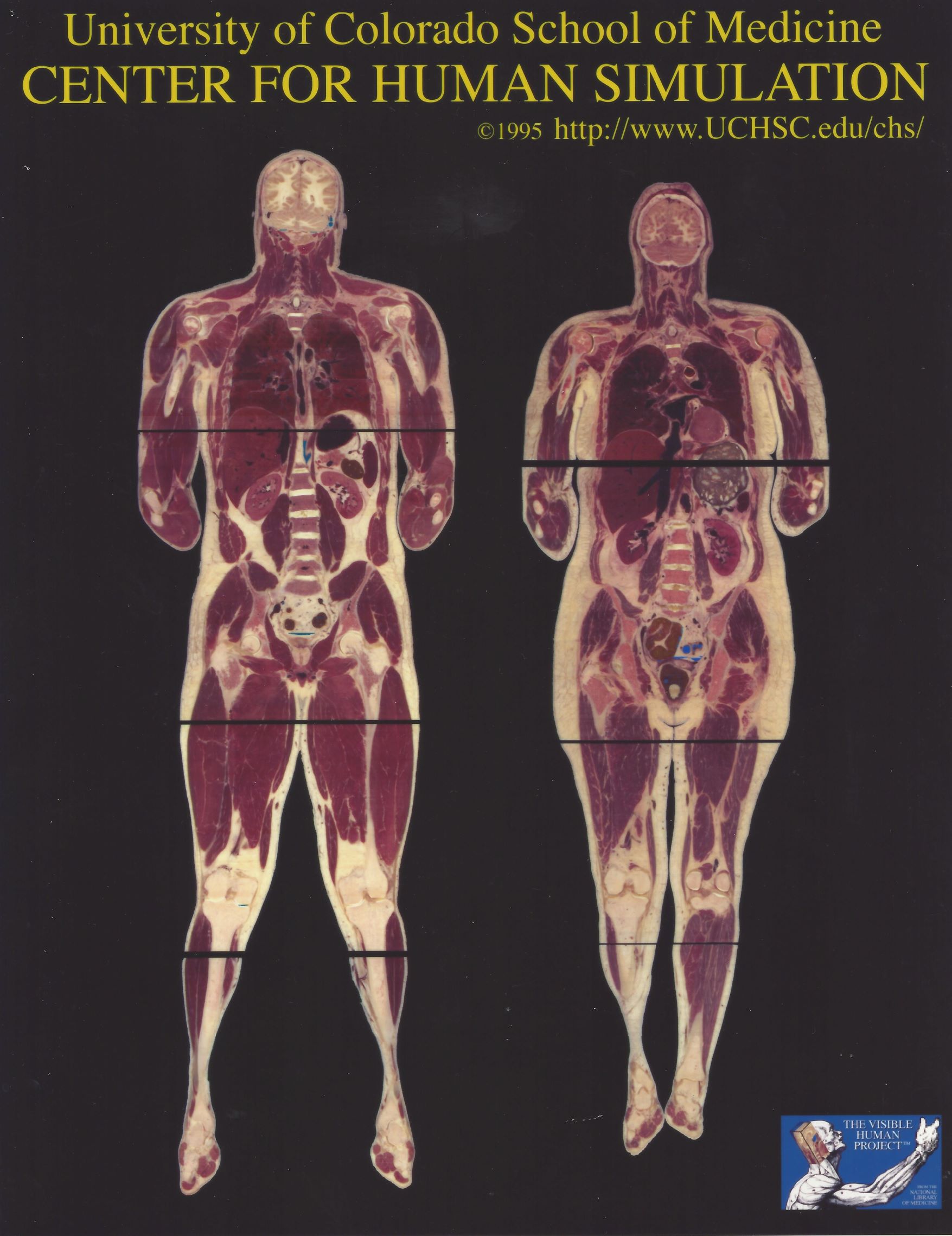

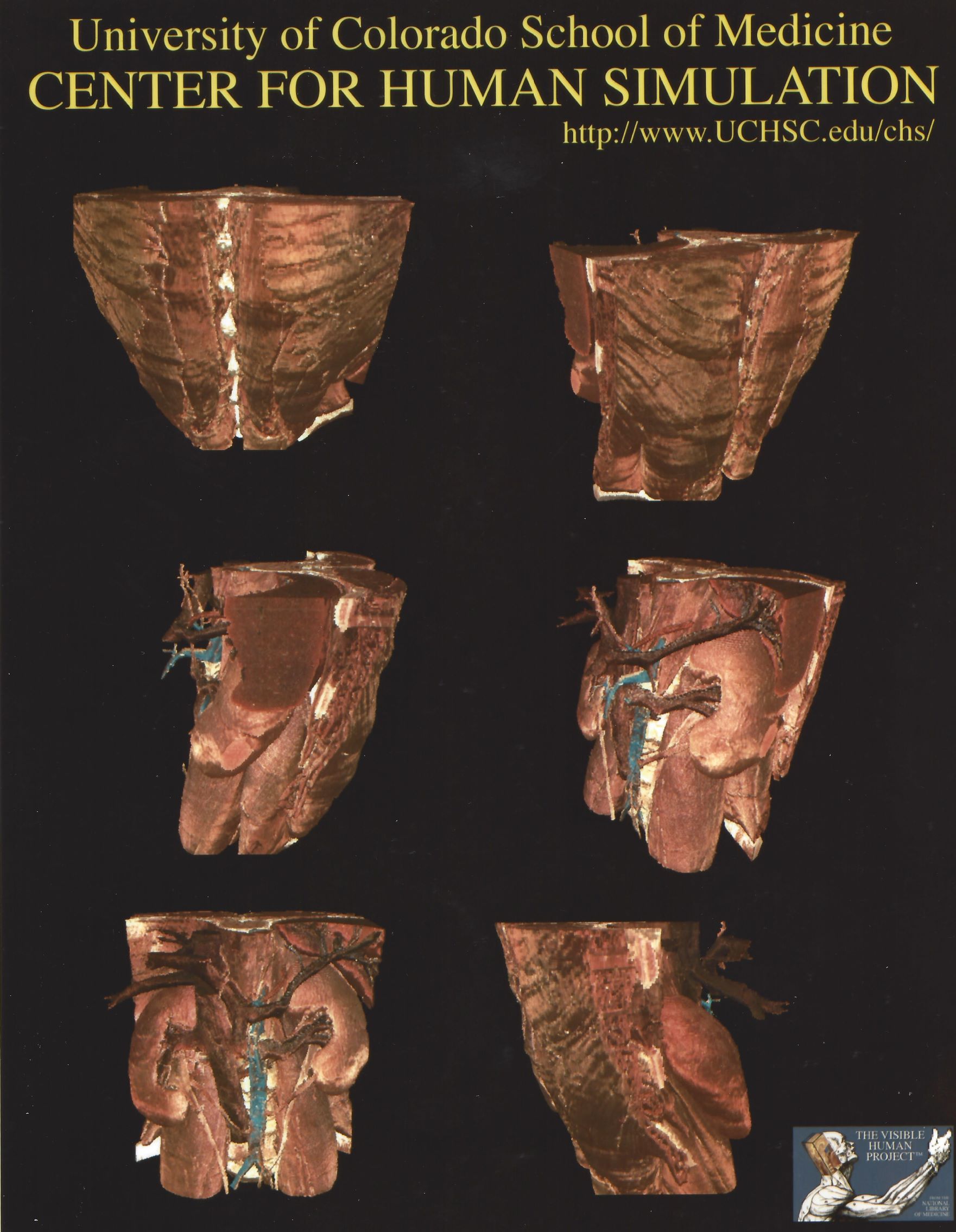

Visible Human Project - Anatomical Data Acquisition, Processing, and Utilization

How I got the job

I got this job after a 5-minute interview. My wife and I moved to Denver, Colorado from Macedonia, Ohio. Marlene had taken a new job with Leprino Foods, a cheese manufacturer. My plan was to go to grad school. Initially I was looking at the Radiation Physics program at the University Hospital in Denver, Colorado. After talking with the folks there, I decided Radiation Physics was not for me. At the end of my discussion with one of the professors he said, "Hey, if you're looking for a part time job while you are in school, go see Vic Spitzer. He does computer stuff." I went to see Dr. Spitzer. He was on his way to an appointment, so he had five minutes to talk with me. We chatted. He gave me a key and said, "You're hired. I need that SUN4 to talk to that automated video-to-film camera."

First day on the job

It was not too tough a task. The camera had a standard RS-232 port. The SUN4 had a serial port that could do RS-232 or RS-485. The lab did not have an oscilloscope and I did not have any RS-232 breakout hardware. On my first day I figured out that the only way to tell how the port was configured was to check a jumper on the SUN4 processor card. I figured the lab folks would not want their system disassembled during the day, so I left and came back after 10:00 pm. The first time I saw Dr. Spitzer, Vic, after the interview was after midnight, with his computer disassembled all over the lab floor. It didn't faze him a bit. Vic was, and I imagine still is, a pretty cool dude. He reminded me of Doc in the Back to the Future movies.

Overview of the Visible Human Project

This was a cool place to work. The lab had been selected to produce the Visible Human data set for the National Library of Medicine. The video below gives a great overview of the Visible Human Project.

This video shows a Celiac Plexus Block Simulator Dr. Reinig and I worked on.

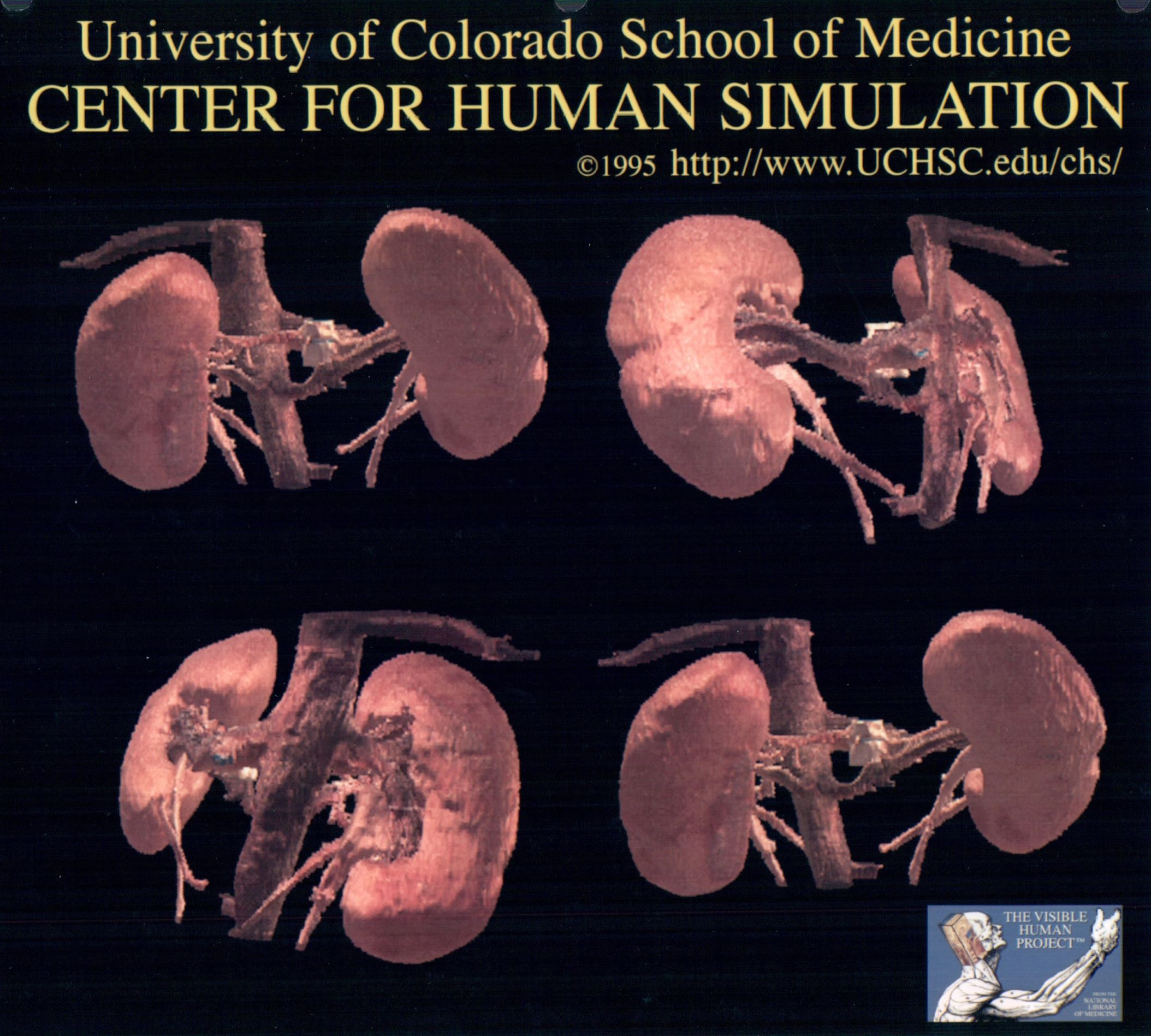

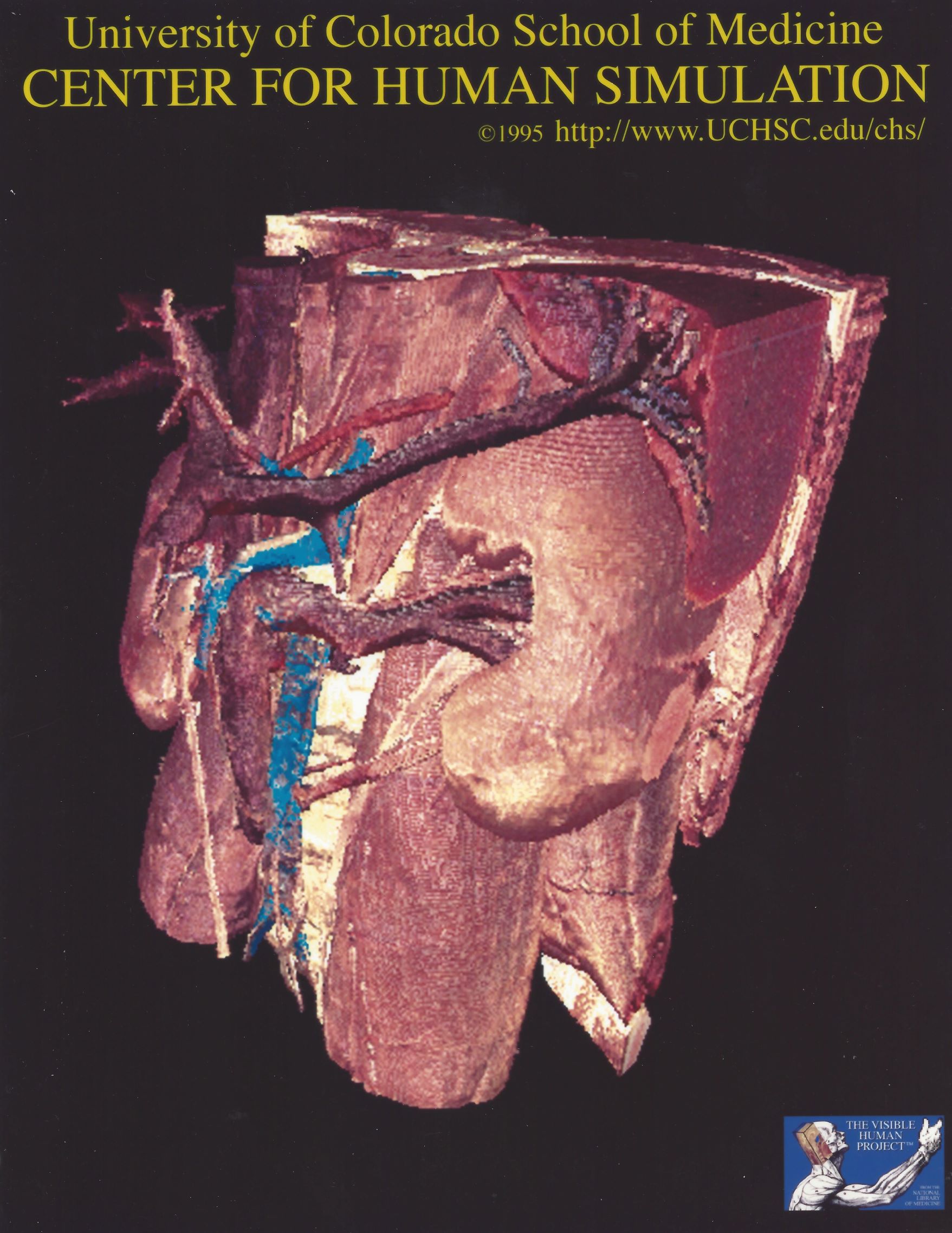

Cool work products produced by the team

What I did while working at the Anatomical Visualization Lab

After I had been working at the lab for a while, a guy named Dr. Karl Reinig wandered in and asked about working at the lab. Dr. Reinig had his own computer graphics/visualization business that he ran out of his house. Dr. Reinig was, and I imagine still is, a wizard, way smarter than the average bear. His presence at the lab supercharged us all. That guy could do math, visualization, and physical modeling code that makes ordinary folks' heads explode. I, on the other hand, am more of a get-things-done, production engineer kind of guy. Together we made a powerful team. I was content being his right-hand man.

Design & Build Cryogenic Macrotome

The lab came up with the name Cryogenic Macrotome for the system that processed the cadaver sections. A Bridgeport mill was used to grind off a millimeter at a time from the cadaver section. It was retrofitted with a numerical control system for the X and Z axes. I programmed the system to perform the slicing.

Three cameras were used to capture each slice: a video camera, a 42-bit digital color camera, and a film camera. The cameras were attached to a mechanical slide system on the ceiling, and a stepper motor positioned them during the image acquisition process. Each camera came with a remote to trigger it. I acquired a set of Opto-22 digital I/O modules, which were used to trigger image capture on each camera.

I wrote a program on a PC to control and coordinate the image acquisition process. That was quite an adventure. I used Borland C++ 3.0. The only problem was that I had never written C++ and had only written one or two C programs ever. My previous programming experience was in PL/M-86. The code would not win any prizes in a coding contest. But, most importantly, the program got the job done and contributed to the success of the Visible Human project.

Edge Detection & Segmentation Tools

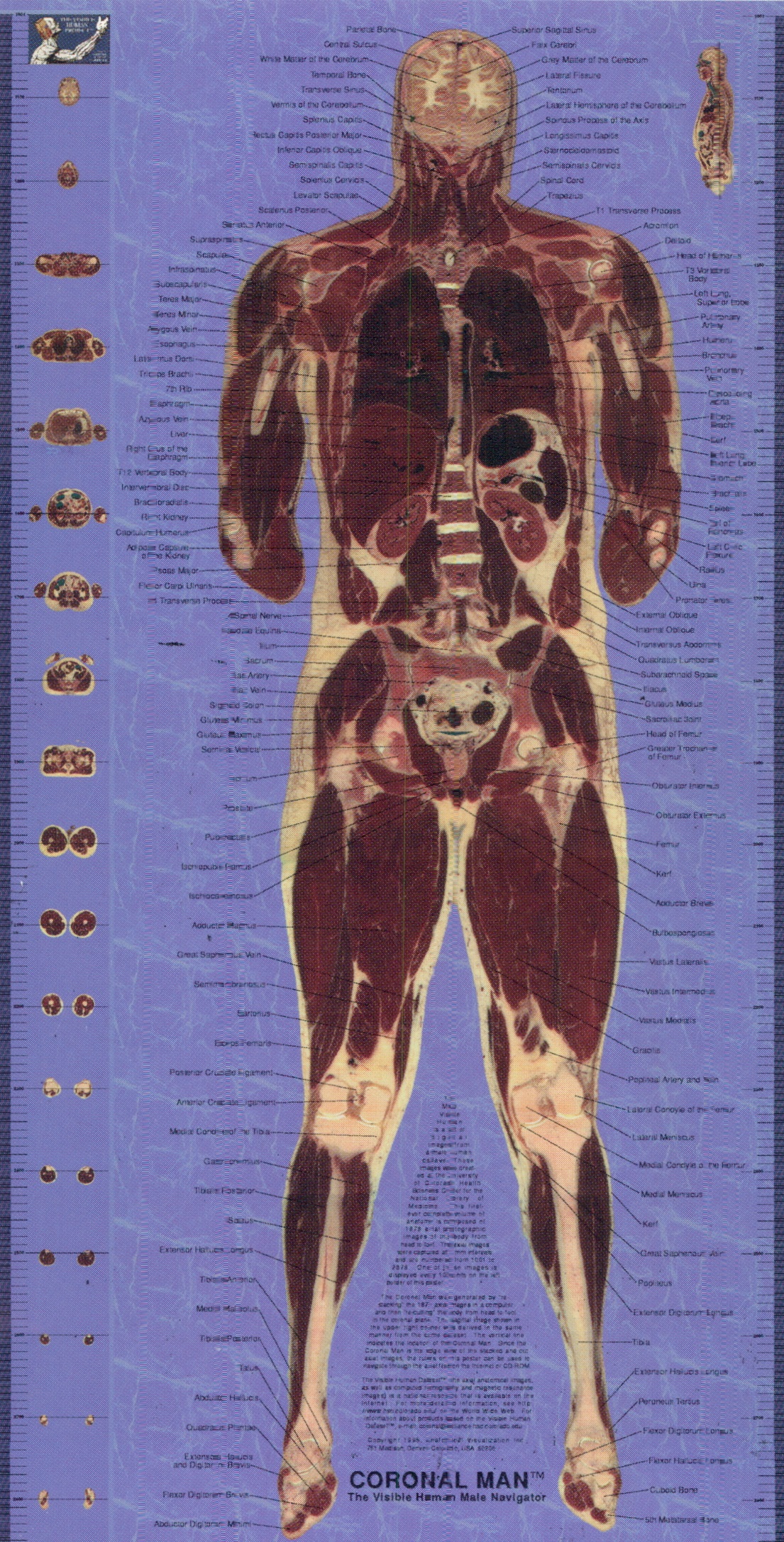

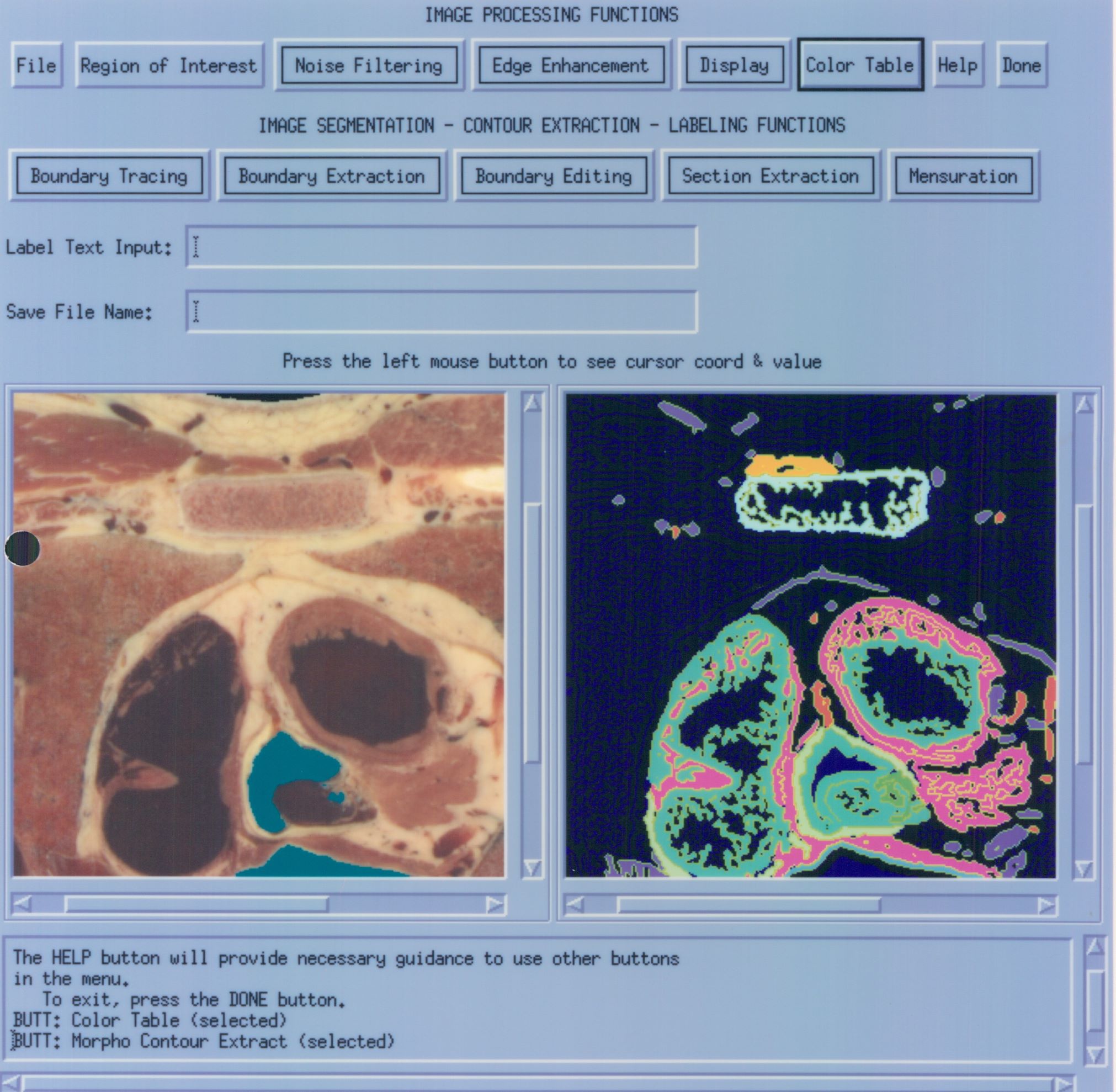

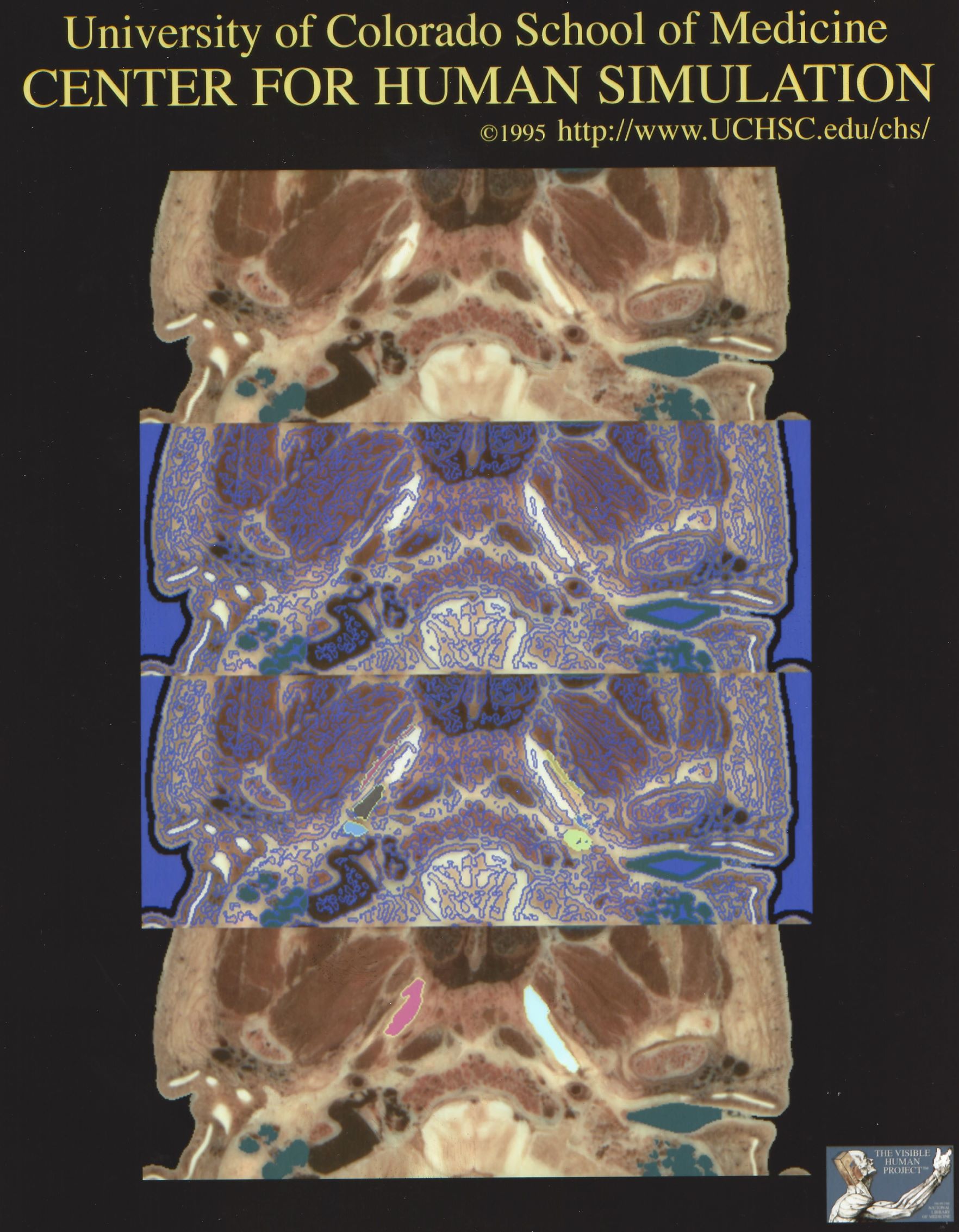

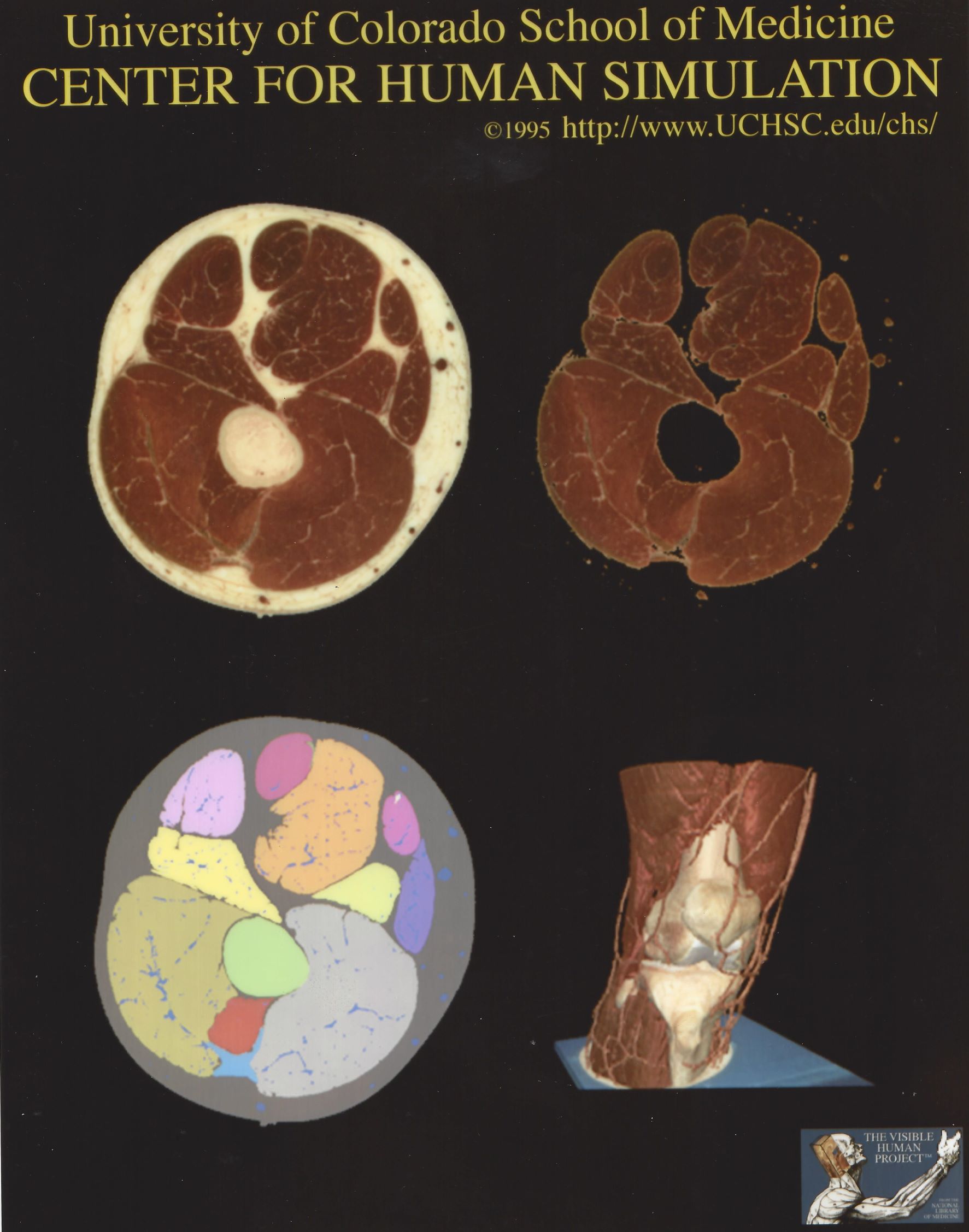

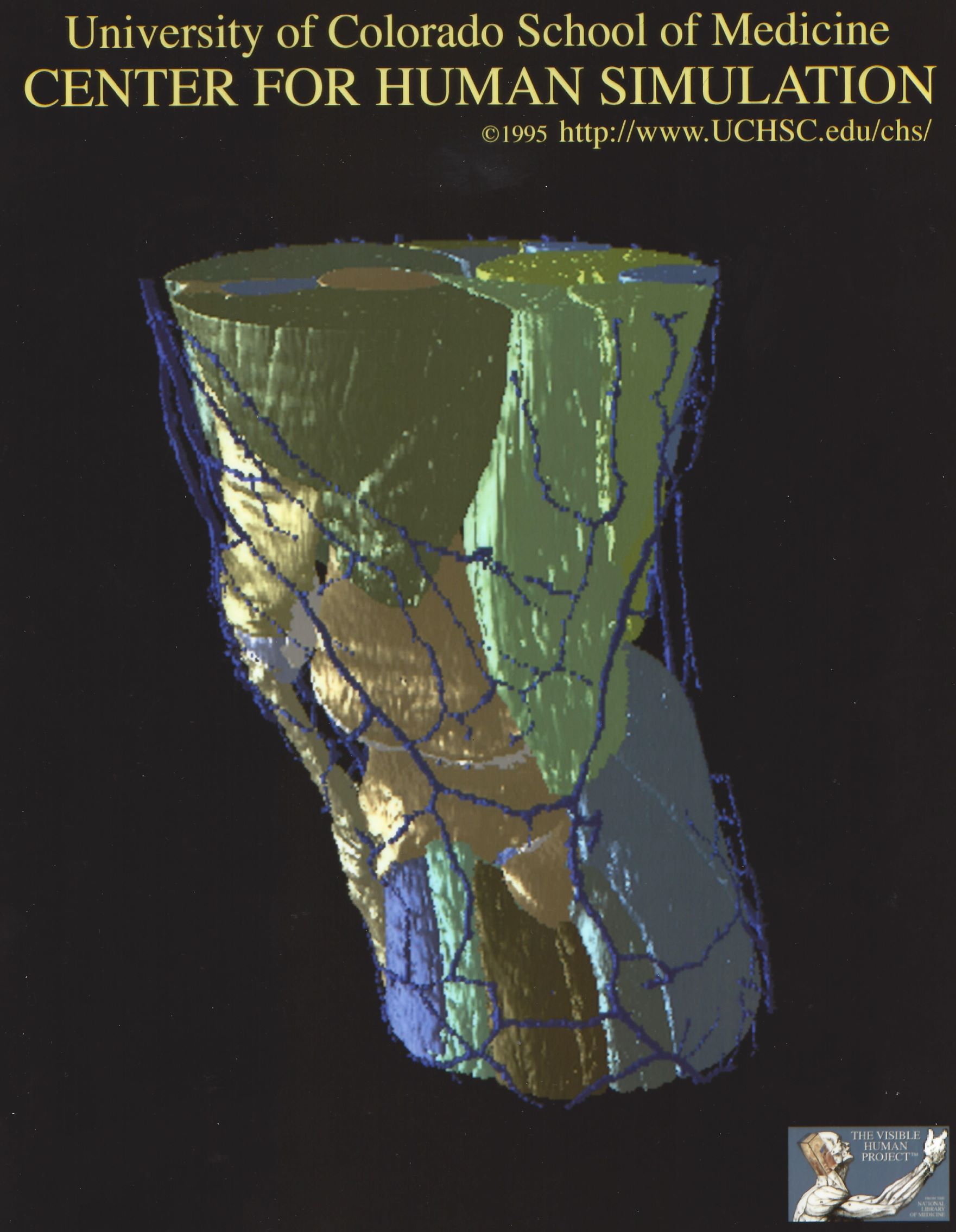

Dr. Reinig did some of that PhD magic and found us a 3D edge detection algorithm. I implemented it and we ran it on the anatomical data captured by the cryogenic macrotome. No edge detection algorithm works perfectly, so it was necessary to correct its output. The first computer we used for image processing was a PIXAR computer. You loaded the 3D data into it, and the hardware was structured so you could access the data in different image planes. I wrote programs using their GUI package, RAP. For each slice the user was presented with image data overlaid with edge data, and the user corrected the edge data. When a contiguous edge enclosed an anatomical feature, the user would do a flood fill of the enclosed area. This flood-filled edge/overlay was then saved as the mask image for that slice. In the images above, see Helen Pester's MATLAB segmentation tool. Helen was a colleague; she wrote that tool after I left the lab. Also see the image with the caption "Raw Image, Edge Detection, Feature Segmentation, Feature Masks".
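
The flood fill step that turned a closed, user-corrected edge into a mask can be sketched as a breadth-first search. This is a generic illustration, not the RAP code that ran on the PIXAR; the function name `flood_fill_mask` and the 0/1 list-of-lists image layout are assumptions. A 4-connected fill is used because it cannot leak through the diagonal steps of an 8-connected contour, which is one reason a contour only has to be closed, not thick, before filling.

```python
from collections import deque


def flood_fill_mask(edges, seed):
    """Return a 0/1 mask of the region 4-connected to seed, bounded by edge
    pixels (edges[r][c] == 1). seed is a (row, col) tuple inside the feature."""
    rows, cols = len(edges), len(edges[0])
    mask = [[0] * cols for _ in range(rows)]
    r0, c0 = seed
    if edges[r0][c0]:
        return mask  # seed sits on the edge itself; nothing to fill
    mask[r0][c0] = 1
    queue = deque([seed])
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            rr, cc = r + dr, c + dc
            if (0 <= rr < rows and 0 <= cc < cols
                    and not edges[rr][cc] and not mask[rr][cc]):
                mask[rr][cc] = 1
                queue.append((rr, cc))
    return mask
```

If the contour has a gap, the fill escapes into the surrounding area, which shows up immediately as a mask that is far too large; that is why the edge correction step had to come first.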

Data Manipulation and Access Library

The Visible Human data set is huge. As we started using the data, we realized it would be nice to have a standard interface we could use to access the data. The library I wrote had interfaces to access data slices in all three planes, arbitrary 2D areas from a slice, or arbitrary 3D chunks from the data set. In addition, some lighter-weight applications did not require full-resolution data, so we generated some reduced-resolution data sets. One of them was an 8-bit indexed color data set.
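
The core idea of such an access library is a single indexing rule that every plane and chunk accessor shares. The sketch below is hypothetical: the `Volume` class, its method names, and the axis convention (z across the cryosection stack, y front to back, x left to right) are assumptions for illustration, and a real library would read from disk rather than hold the whole data set in a Python list. An arbitrary 2D area from a slice is simply a chunk one voxel thick.

```python
class Volume:
    """Hypothetical read-only accessor for a voxel volume stored slice by slice.

    Voxels live in one flat list in z-major order (z, then y, then x), the way
    a stack of axial section images would be concatenated on disk.
    """

    def __init__(self, voxels, nx, ny, nz):
        if len(voxels) != nx * ny * nz:
            raise ValueError("voxel count does not match dimensions")
        self.voxels, self.nx, self.ny, self.nz = voxels, nx, ny, nz

    def at(self, x, y, z):
        return self.voxels[(z * self.ny + y) * self.nx + x]

    def axial(self, z):
        """Plane of constant z: rows indexed by y, columns by x."""
        return [[self.at(x, y, z) for x in range(self.nx)] for y in range(self.ny)]

    def coronal(self, y):
        """Plane of constant y: rows indexed by z, columns by x."""
        return [[self.at(x, y, z) for x in range(self.nx)] for z in range(self.nz)]

    def sagittal(self, x):
        """Plane of constant x: rows indexed by z, columns by y."""
        return [[self.at(x, y, z) for y in range(self.ny)] for z in range(self.nz)]

    def chunk(self, x0, x1, y0, y1, z0, z1):
        """Sub-volume [x0, x1) x [y0, y1) x [z0, z1), indexed chunk[z][y][x]."""
        return [
            [[self.at(x, y, z) for x in range(x0, x1)] for y in range(y0, y1)]
            for z in range(z0, z1)
        ]
```

Keeping the storage order in one place (`at`) means a reduced-resolution copy of the data can be served through the same interface by constructing another `Volume` with smaller dimensions.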

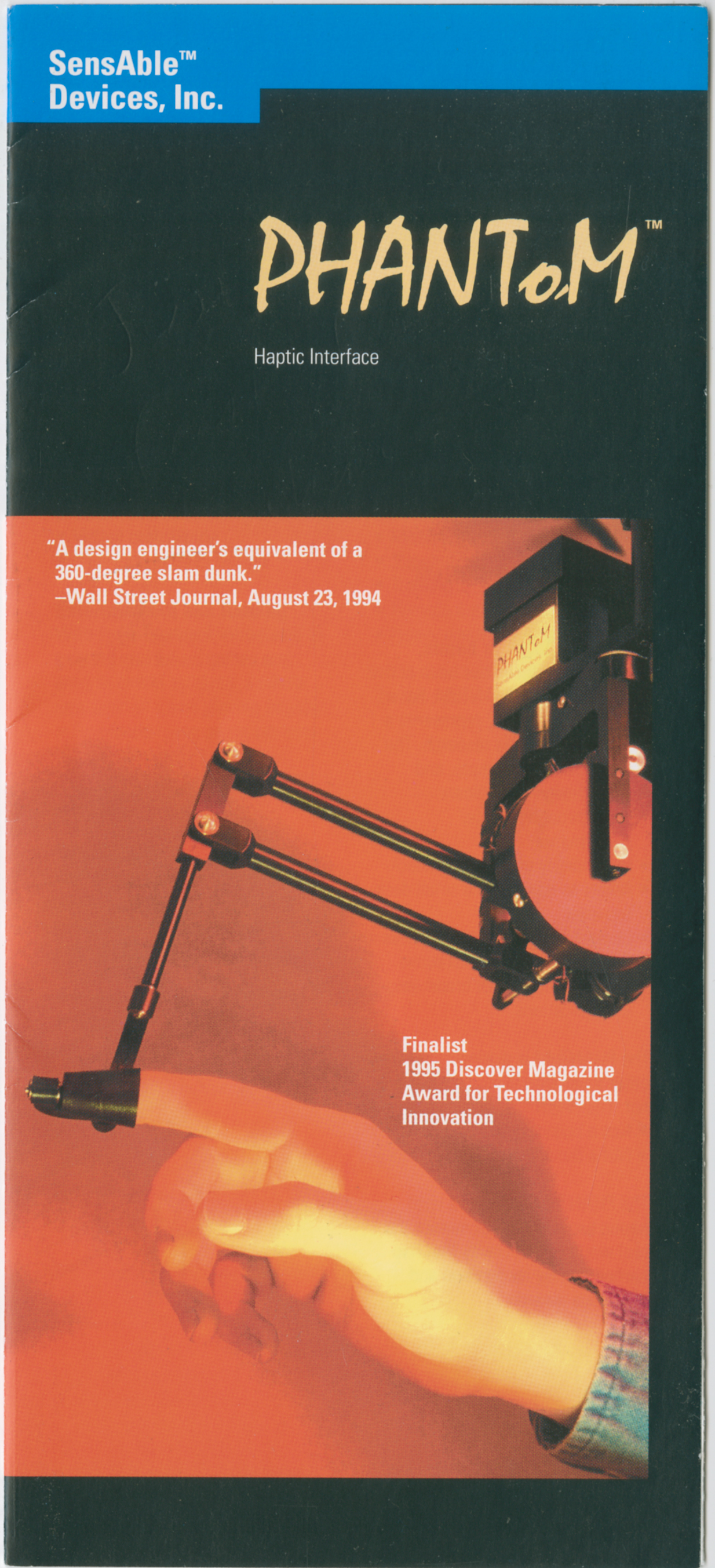

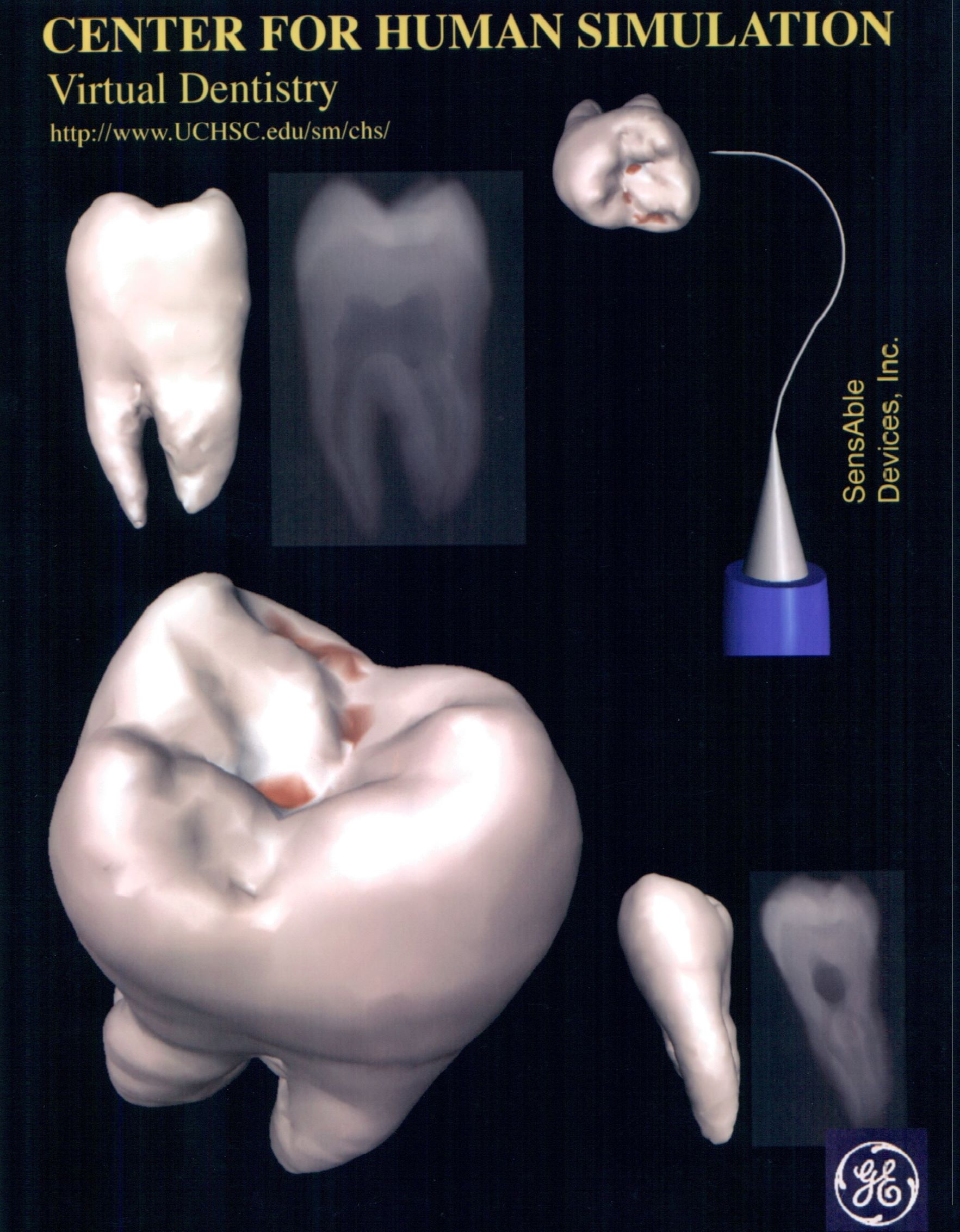

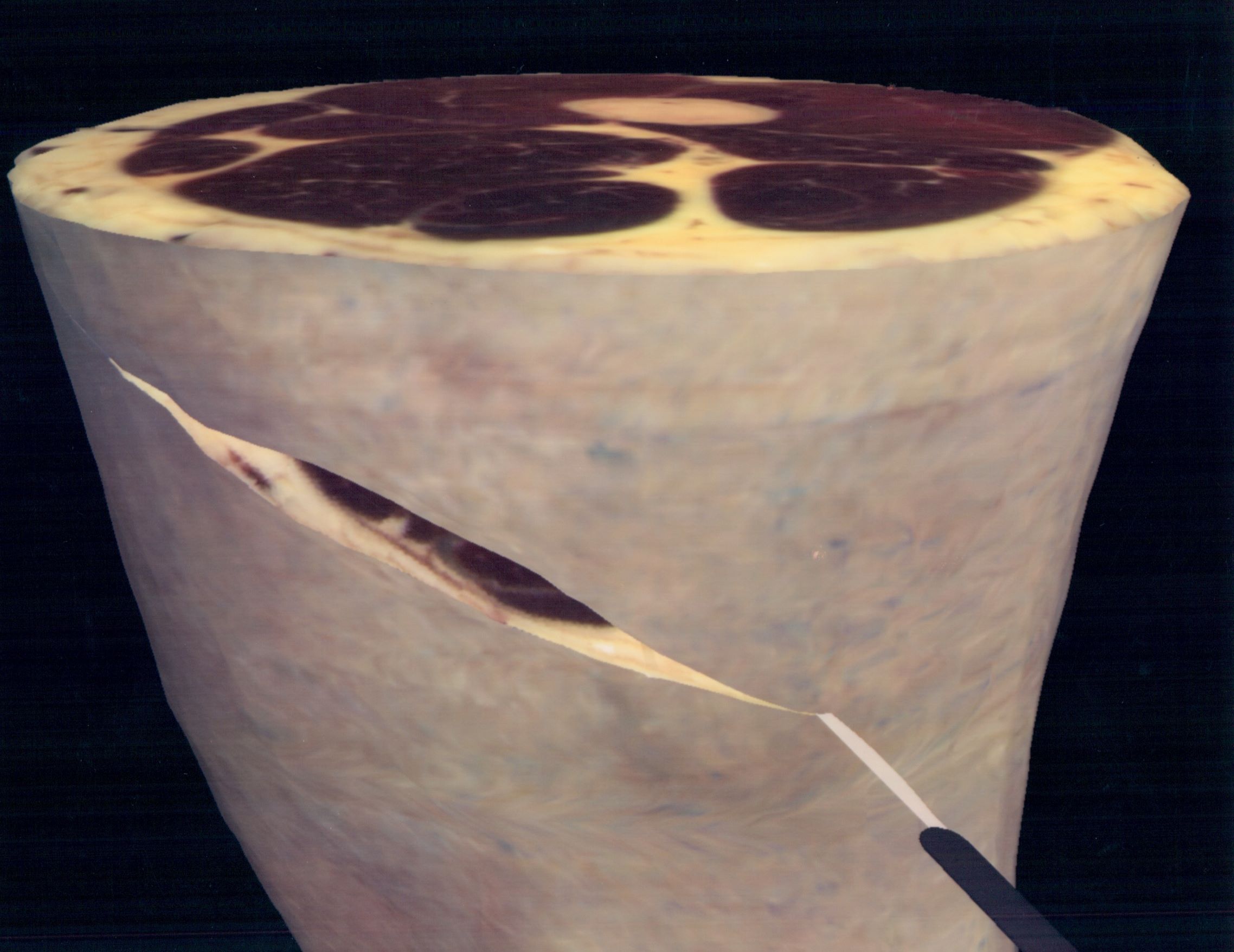

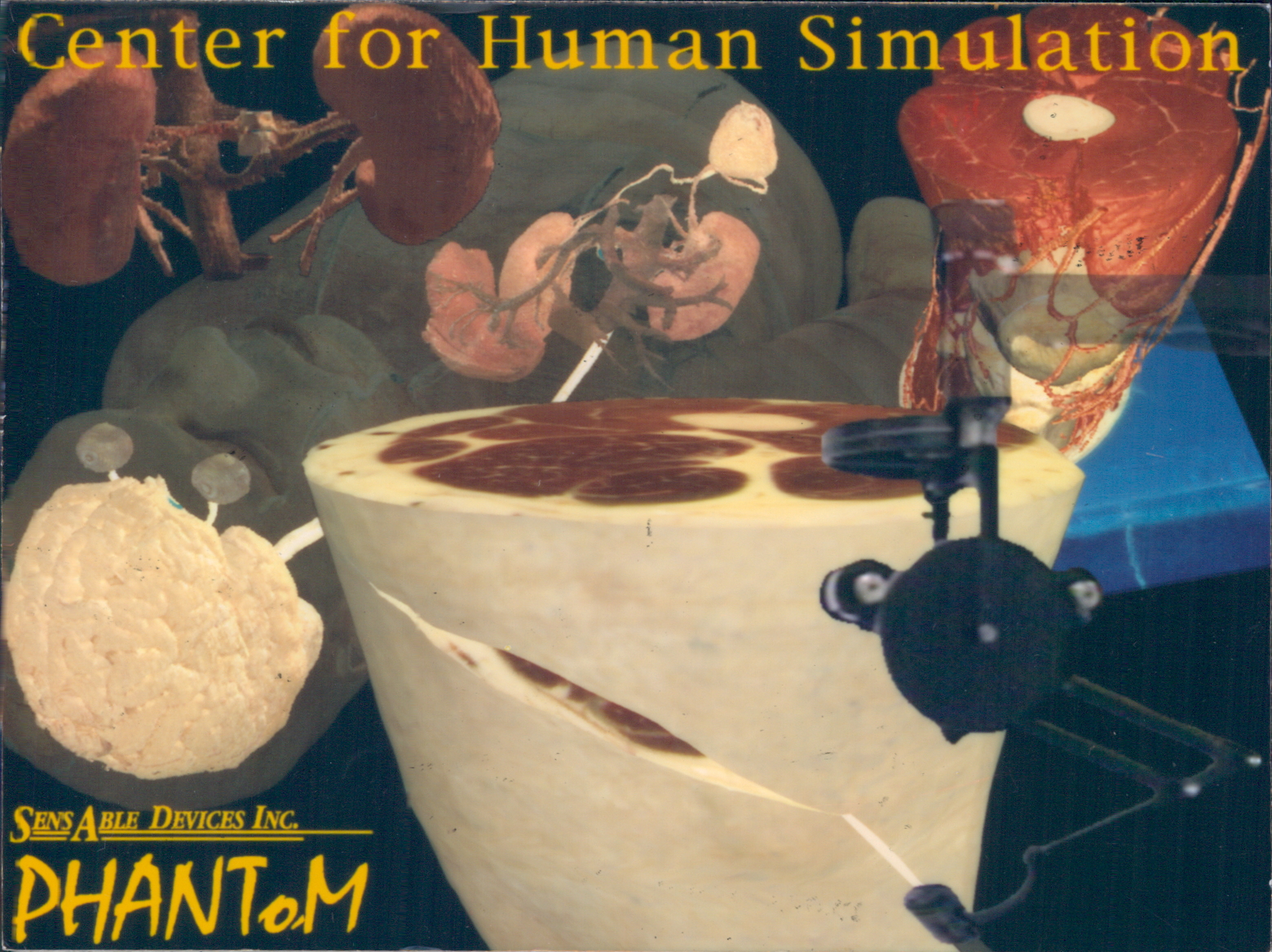

Celiac Plexus Block Simulator

Dr. Reinig and I were sitting in a Chinese restaurant eating lunch. Karl got "that look" on his face that told me we were going to have some fun. He said, "Chuck, we should make a Celiac plexus block simulator." Being game for anything and not knowing what the heck a Celiac plexus block was, I said, "Cool, sign me up." We made a fiberglass model of the Visible Male's back. Inside the model we placed a Sensible Devices force feedback device. A needle passed through the back and was attached to the force feedback device. We had a segmented 3D model of the Visible Male's thorax and abdomen, which we virtually placed inside the physical model of the back. At any point, we knew where the needle was in the anatomy.

When doing a needle stick, the interventional radiologist uses fluoroscopic imaging to visualize placement of the needle. We acquired a foot switch similar to one used in a fluoroscopy lab. Since we had a complete CT scan of the Visible Male we were able to generate simulated fluoroscopic images to show the location of the needle in the anatomy. We could also show the anatomical data so the trainee could visualize the anatomy the needle was traveling through. The port driver for the force feedback device was my first exposure to a device driver in Windows. I did all the work described in this paragraph. It was cool, but Dr. Reinig's contribution was even cooler.
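
Simulated fluoroscopic images of this kind are usually built by casting rays through the CT volume and applying the Beer-Lambert law, I/I0 = exp(-sum(mu * dl)), along each ray. The sketch below is a simplified illustration, not the simulator's actual code: it assumes a parallel beam along one volume axis (real fluoroscopy uses a diverging cone beam), converts Hounsfield units to attenuation with the standard relation mu = mu_water * (1 + HU/1000), and uses an assumed water attenuation of 0.2 per cm. The names `hu_to_mu` and `simulated_fluoro` are hypothetical. A needle could be added by writing high-HU values into the voxels along its current path before projecting.

```python
import math

MU_WATER_PER_CM = 0.2  # assumed linear attenuation of water at a typical fluoroscopy energy


def hu_to_mu(hu):
    """Convert a CT number (Hounsfield units) to linear attenuation in 1/cm."""
    return max(0.0, MU_WATER_PER_CM * (1.0 + hu / 1000.0))


def simulated_fluoro(ct, voxel_cm):
    """Parallel-beam projection of a CT volume ct[z][y][x] along the y axis.

    Returns an image indexed [z][x] of transmitted intensity I/I0, so air
    (HU -1000) comes out as 1.0 and dense tissue darkens toward 0.
    """
    nz, ny, nx = len(ct), len(ct[0]), len(ct[0][0])
    image = []
    for z in range(nz):
        row = []
        for x in range(nx):
            path = sum(hu_to_mu(ct[z][y][x]) for y in range(ny)) * voxel_cm
            row.append(math.exp(-path))
        image.append(row)
    return image
```

If y runs front to back, the result corresponds to an anterior-posterior view; a display would normally invert or window these intensities to look like a fluoroscopy monitor.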

Dr. Reinig did the force feedback modeling code. When you entered a muscle in the virtual back, the slight pop was transmitted to the needle. The extra force needed to slide through the muscle was also transmitted by the force feedback device. If the needle hit a bone, the force feedback model would not let the needle move. Like I said, Karl was smarter than the average bear.

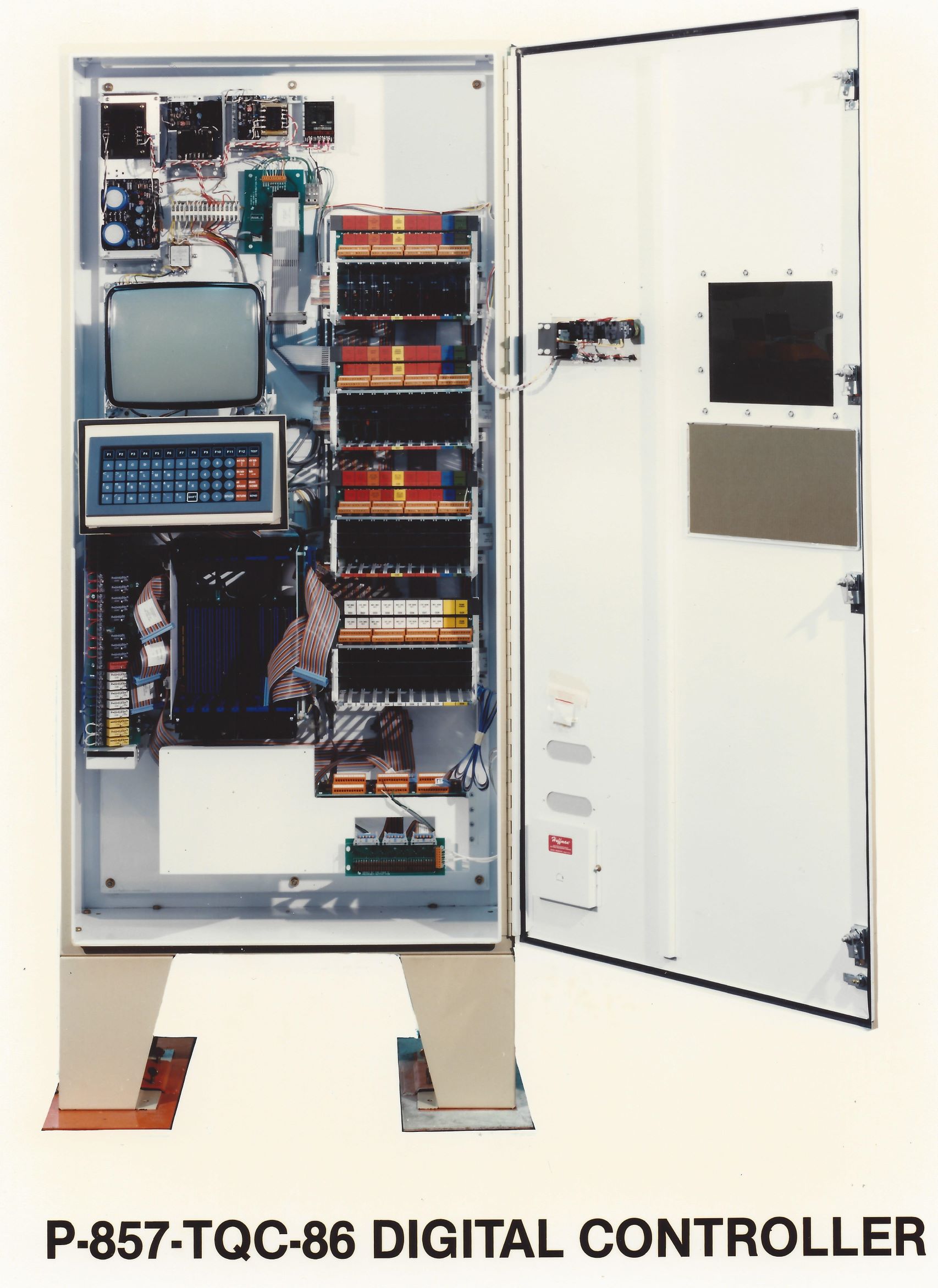

Akron Standard - Tire Test Machines

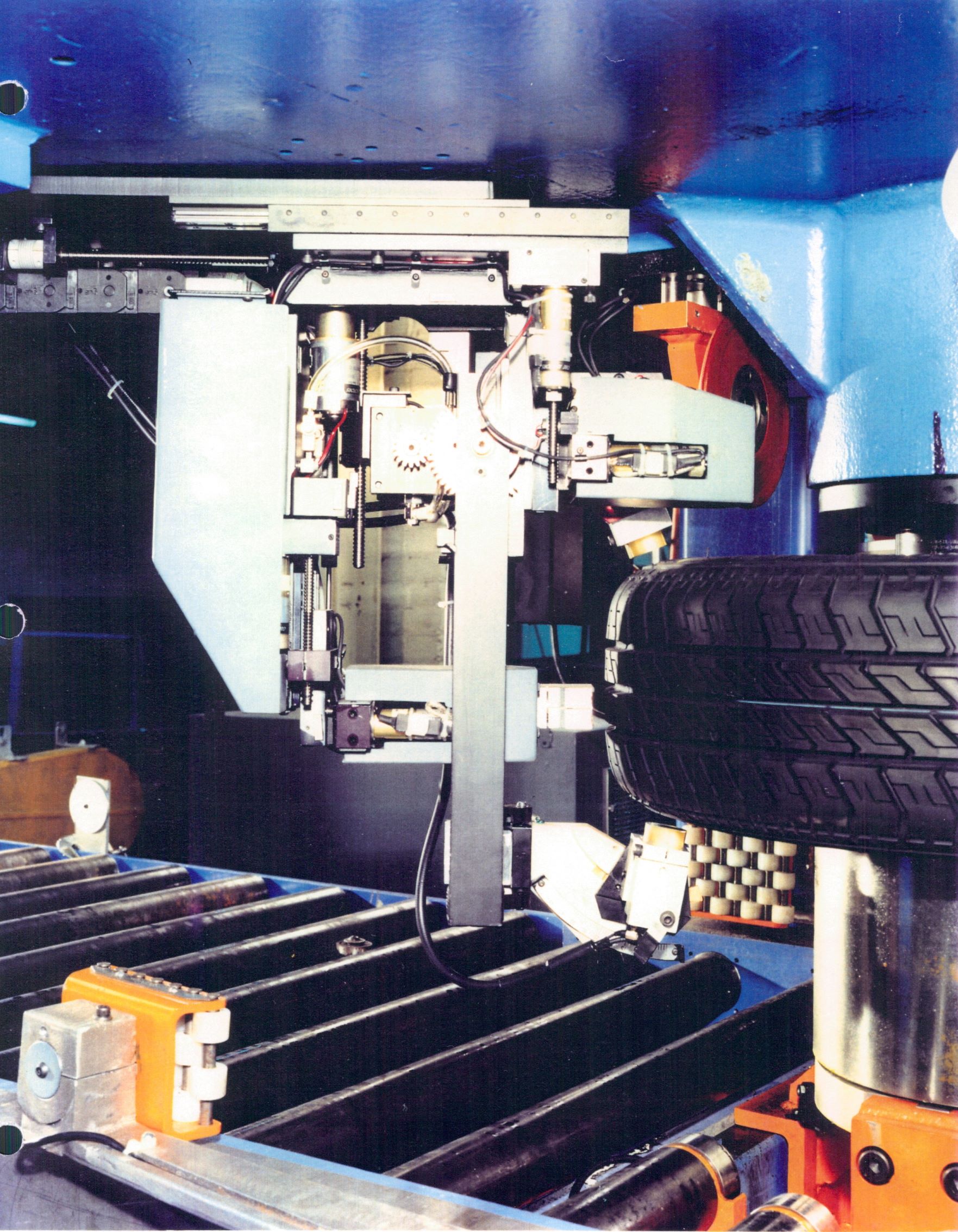

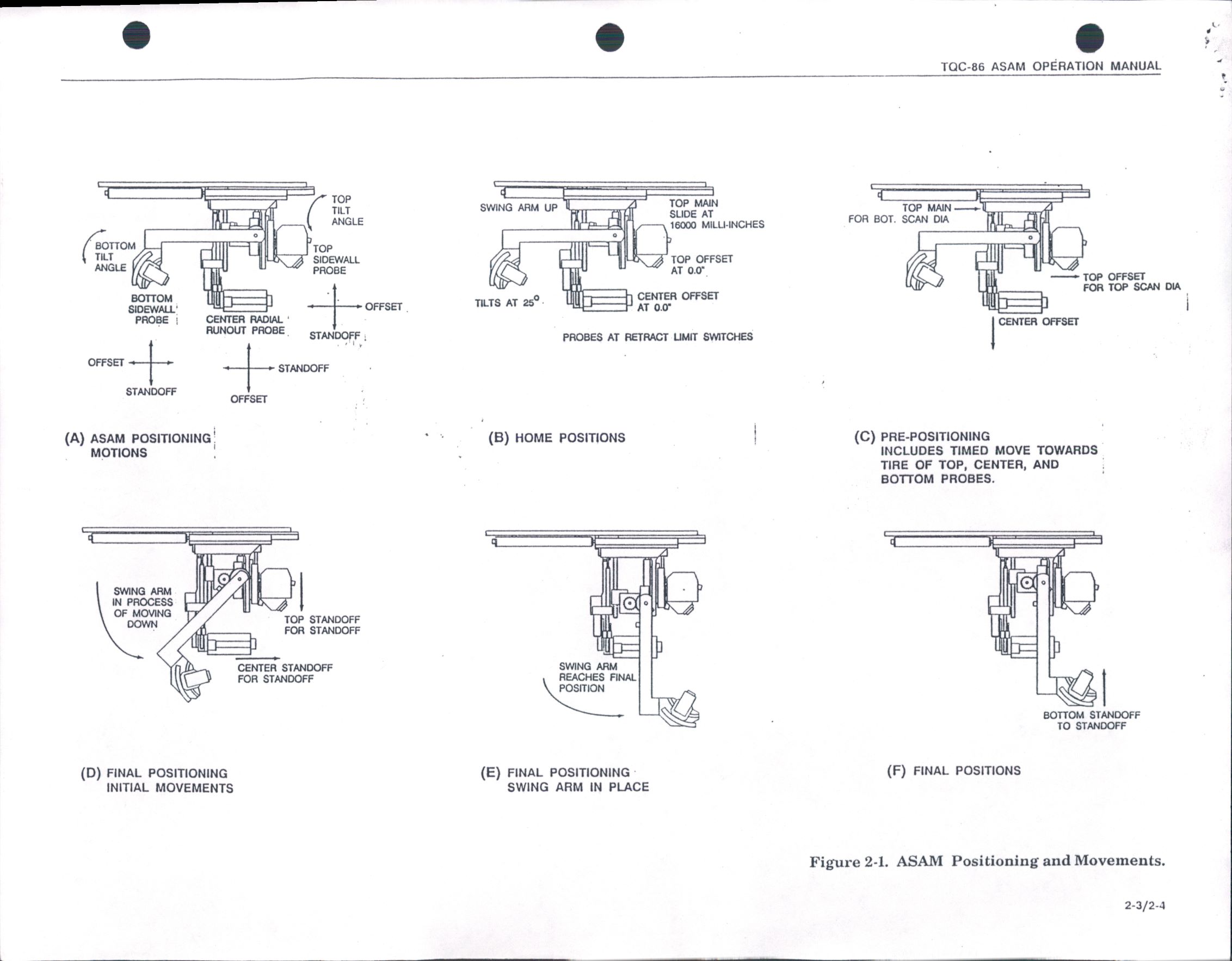

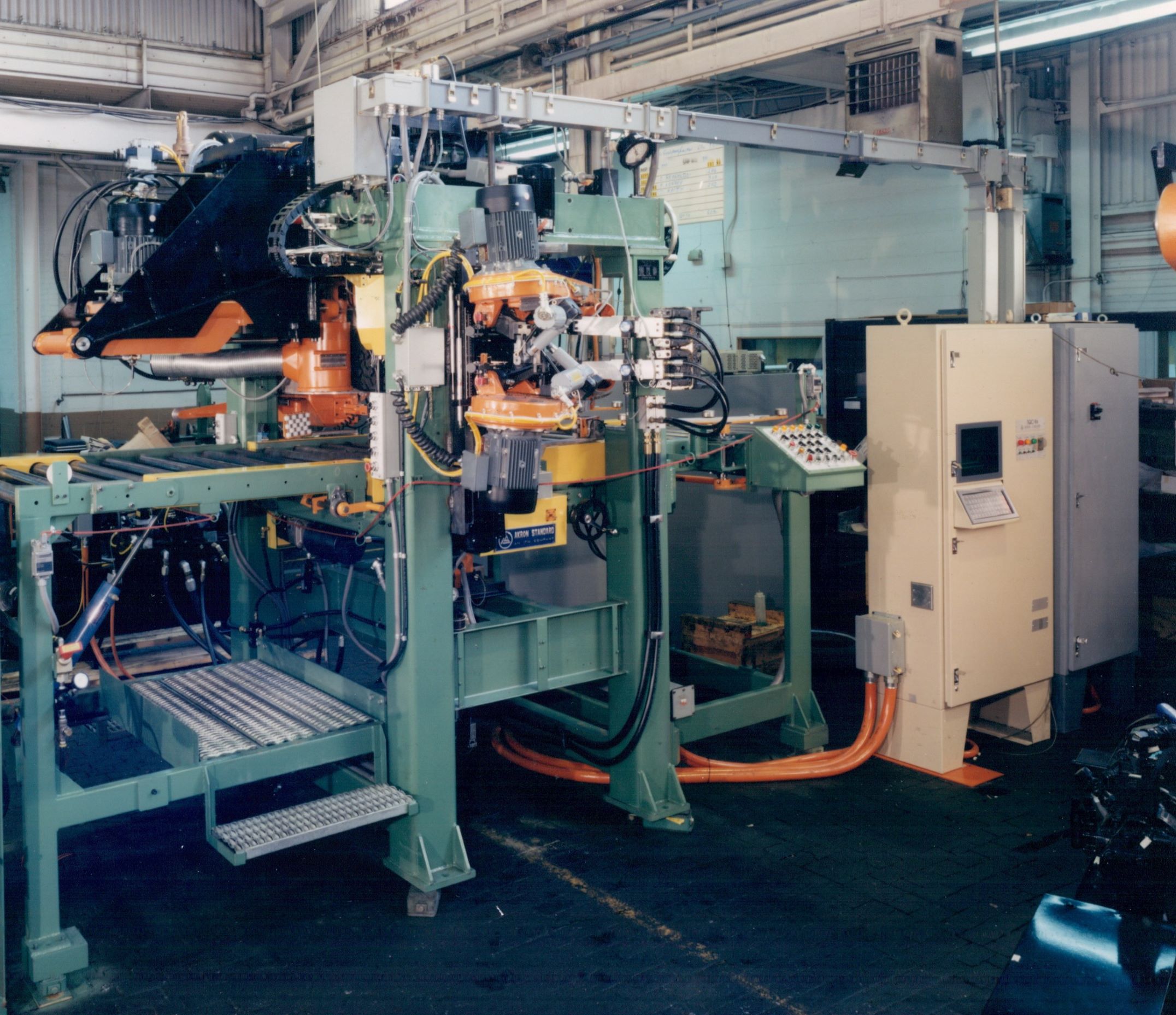

The main product line at that time was the Tire Uniformity Optimizer (TUO). The TUO measured radial force variation, radial runout, and lateral runout. With the addition of sidewall appearance probes, the user could also monitor for sidewall bulges.

Before I started at Akron Standard, the TUOs were set up to run a particular tire size. When a manufacturer wanted to measure a different tire size, the machine was shut down while the mechanics, using wrenches and other tools, adjusted it for the new size. The system I worked on was called a flexible TUO. An in-plant tracking system would tell the TUO the characteristics of the next tire to be tested. When the current test was finished and the tire was being dismounted and sent on its way, the TUO was already adjusting itself as much as possible for the next tire size.
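
Radial force variation and runout are commonly summarized from one revolution of equally spaced samples, either as a composite peak-to-peak value or as the amplitude of individual harmonics, with the first harmonic being the usual one. The sketch below shows that arithmetic under those assumptions; it is not Akron Standard's TUO code, and the function names are hypothetical. The same math applies whether the samples are forces or displacements.

```python
import cmath
import math


def peak_to_peak(samples):
    """Composite peak-to-peak value of one revolution of force or runout samples."""
    return max(samples) - min(samples)


def harmonic(samples, n=1):
    """Amplitude and phase (degrees) of the n-th harmonic, assuming the samples
    are equally spaced over exactly one revolution.

    The harmonic is modelled as amplitude * cos(n * angle + phase); its
    peak-to-peak value is 2 * amplitude.
    """
    count = len(samples)
    coeff = sum(
        s * cmath.exp(-2j * math.pi * n * k / count) for k, s in enumerate(samples)
    )
    return 2.0 * abs(coeff) / count, math.degrees(cmath.phase(coeff))
```

The phase tells the operator where around the tire the high point sits, which is the information needed to mark the tire or match it to a wheel.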

I was lucky enough to be the developer for the Automatic Sidewall Appearance Monitor (ASAM).

I also worked on light truck machines, as well as the D90, a machine for testing truck tires. That thing was huge.

Firestone Tire - Tire Test Machines

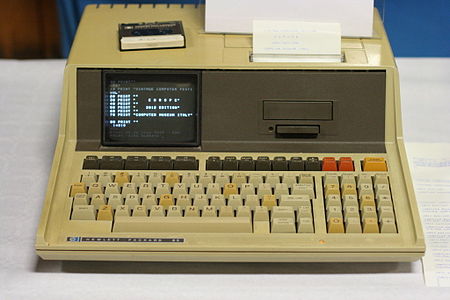

This was a one-year contract job, my first post-college job. I wrote code to control two different tire test machines. The program was written in BASIC on an HP-85 computer very similar to the one pictured. The system had a GPIB interface to the tire test machine.

One of the tire test machines was similar to the one pictured below.

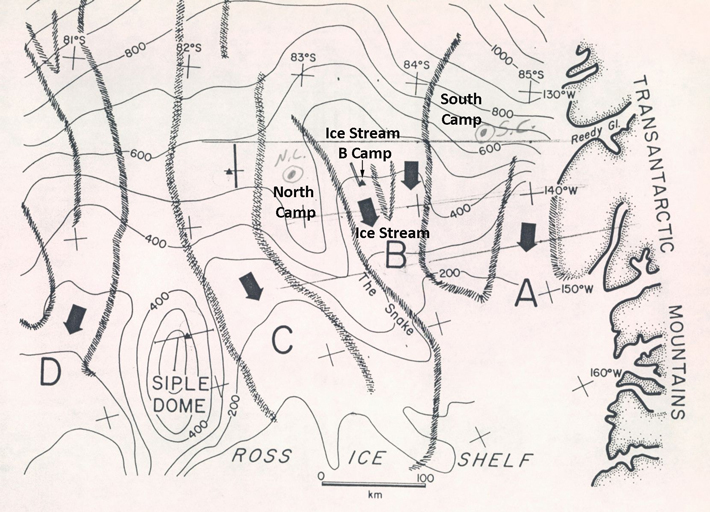

Antarctica - Data Acquisition - College Job

High Cool Factor on this one. One of my bucket list items is to walk on all seven continents. Antarctica was my fifth. I worked with Dr. David Bromwich at the Ohio State University Institute for Polar Studies (now called Byrd Polar Research Center). I did programming for him to analyze meteorological data for about a year. Then I applied for the trip to Antarctica with Dr. Ian Whillans. Before the trip I built a set of cables to hook solar panels to the Transit satellite receivers used to measure locations. Transit, a precursor to GPS, was developed in the late 1950s and was used until 1991. During the expedition my duties were performing distance measurements on pre-existing survey grids, gathering weather data, drilling ice cores, and placing satellite receivers at various locations to measure ice stream flow.

Getting there and getting around.

Columbus, Ohio to Christchurch, New Zealand

We flew commercial airlines from Columbus to Christchurch. (Not this particular aircraft but one like it).

I don't remember what aircraft we flew from Christchurch to McMurdo; it was either a C-141 or a C-130. I remember asking one of the air crew why we did not have any survival training in case the plane crashed in the ocean. His answer was, 'The water is cold. You'll be dead before rescuers arrive.' It wasn't a comfortable or luxurious ride. We took a C-130 from McMurdo Station to Ice Stream B.

Once we got to Ice Stream B, a C-130 came every couple of weeks to bring food, fuel, and most importantly, mail from my girlfriend. This is the final part of the landing sequence. Before landing the C-130 would fly over the ice and drag its skis along the ice. Then it would ascend and fly over where it had dragged the skis and look for any crevasses that may have been uncovered. If the landing area looked safe, then and only then would it land.

To get to our remote camps, North Camp and South Camp, we flew in a Twin Otter aircraft. The Twin Otter also transported us to far-away points on the Ice Stream B survey grid. The aircrew, one pilot and one mechanic, were from Canada. They were a fun bunch.

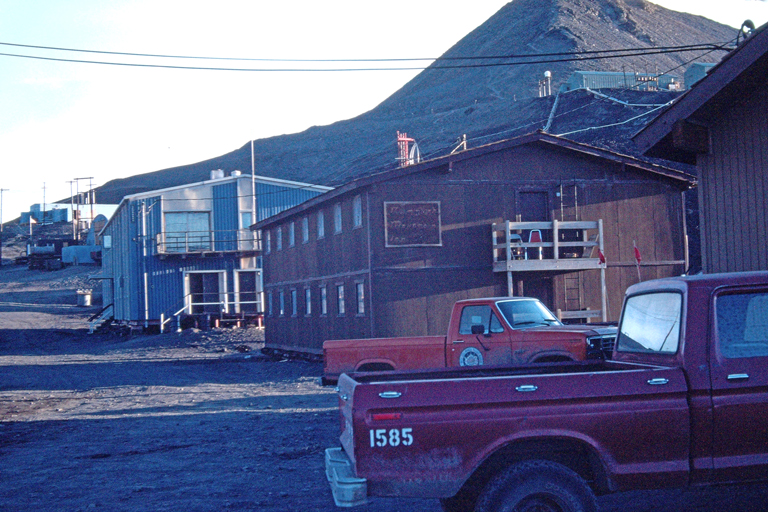

McMurdo Station

Overview of McMurdo Station

I spent my 1985 Thanksgiving at McMurdo Station. The food was excellent. In addition to turkey, we had lobster and shrimp, pretty much all you could eat. The mess hall was great.

Survival School

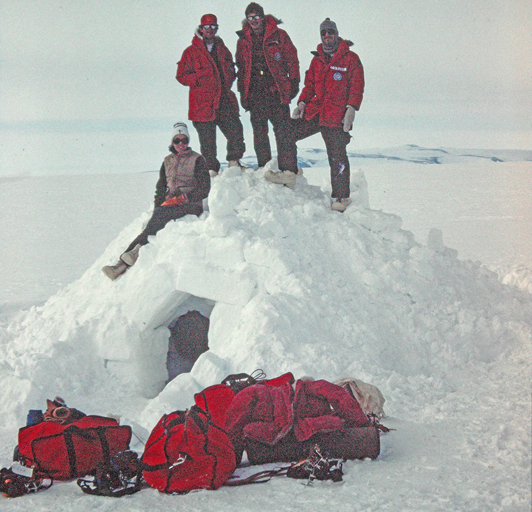

There were four newbies on the Ohio State crew, and attendance at a two-day survival school was mandatory. I remember three main topics being covered: building shelter, rappelling, and how to stop yourself from sliding down a steep incline or into a crevasse.

We were required to build a shelter and spend the night in it. It was a lot of work. Thankfully, there were four of us so it wasn't too bad. It was a bit crowded in the shelter though.

Building a place to sleep - Step 1

Stack all your gear in a pile.

Building a place to sleep - Step 2

Pile lots of snow on top of your gear.

Building a place to sleep - Step 3.

Burrow into the snow mound and remove your gear. Then hollow out the inside of the mound until you have enough room for your crew. It was not spacious. My most vivid memory of that night was snow occasionally falling on my face.

The Ohio State crew, left to right: Kelly Beatley, Rob Mellors, Kees Van der Veen, and Chuck Rush.

We also learned how to stop ourselves if we fell and were sliding down a steep incline. It involved using an ice axe to stop yourself from sliding. It was easy to do in a training situation but I worried that in a real life situation I would be just as likely to stab myself in the chest with the ice axe.

We also did some rappelling. If you were to fall into a crevasse, rescuers could toss down a rope and you could rappel to safety.

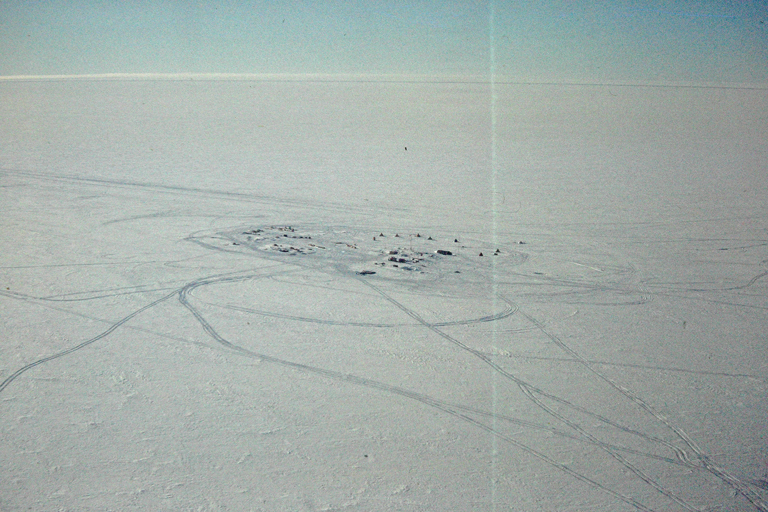

Main Camp - Ice Stream B

We slept in tents, in sleeping bags on plywood, two people per tent. You can see the latrine on the left-hand side of the picture.

This link has a nice description of the design principles of the Scott Tent.

One thing that took some getting used to was the 24 hour sunlight. The sun was out at bed time and the sun was out when you woke up.

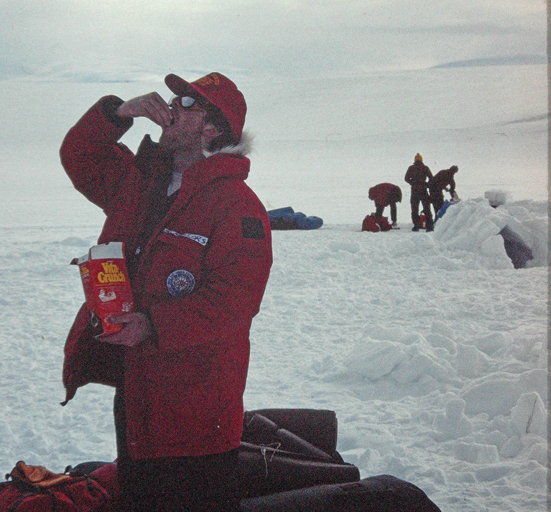

We ate really well at Ice Stream B. The cook was a chef from a fancy big-city hotel. In Antarctica, the purchase price of food is the smallest part of what it costs to get it there, so the expedition bought high-quality food. I liked eating at Ice Stream B, but I spent most of my time at the remote camps, where my partner and I had to cook for ourselves.

At Ice Stream B there were two large Jamesway huts, our tents, and the latrine. One Jamesway hut was the dining hall. The other Jamesway was for the team's equipment and a place to gather in the evenings to work and socialize. I have nice memories of Andrea Donnellan playing her guitar and singing.

The Remote Camps - North Camp & South Camp

The transit satellite receivers we used for geolocation of the survey grids were much less accurate than modern day GPS receivers. To get better accuracy, for the duration of the expedition, a stationary satellite receiver was positioned at each remote camp. The primary mission of the folks at the remote camps was to ensure the satellite receiver was running at all times. The only other required task was to periodically gather temperature, wind speed, and wind direction data.

The Science

There was a large survey grid close to and directly south of Ice Stream B. Kelly Beatley and I spent a number of days surveying that grid. We would place a reflector on one pole, drive to adjacent poles, and measure the distance with a laser range finder.

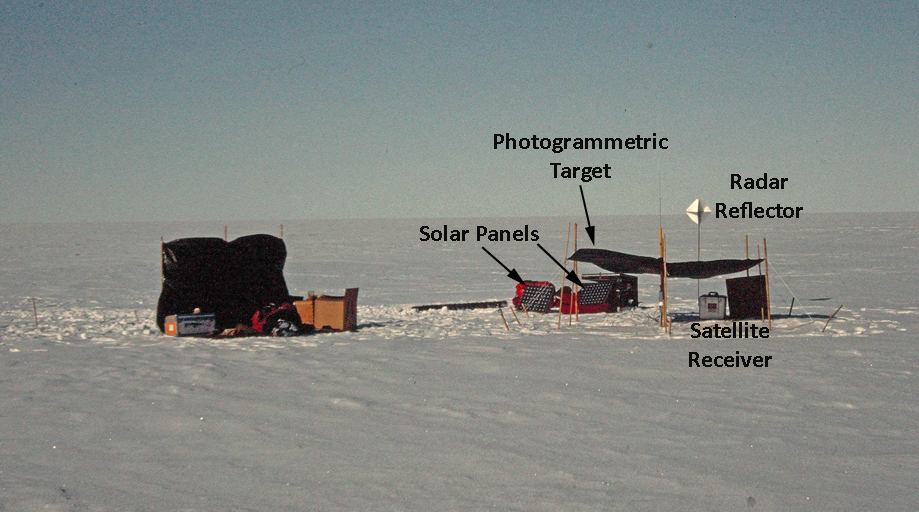

There were designated survey sites located all over the ice stream. A crew of two would be flown to the sites. At each site they would deploy the satellite receiver, put up a photogrammetric target, and drill an ice core. As I recall, the satellite receiver was left at the site for a number of days. The ice core was preserved and eventually transported back to Ohio State for analysis.

After we returned home, a C-130 flew over the ice stream and photographed the entire ice stream. I believe the motion of the ice stream was analyzed using both the position data from the satellite receivers and an analysis of the photogrammetric data.

The crews at Ice Stream B, South Camp, and North Camp periodically collected temperature, wind speed, and wind direction data. Dr. David Bromwich, another researcher at the Ohio State Institute of Polar Studies, used this data in a paper about surface winds in West Antarctica.

I mentioned above that there was not a lot to do at the remote camps. At South Camp, Mike Strobel and I got bored so we dug a snow trench. It is relatively low tech science. You dig a trench and then record the snow layers revealed in the trench sides.

The People

Dr. Ian Whillans was the Principal Investigator for the expedition.

Andrea Donnellan, Mike Strobel, Kees Van der Veen, and Patricia Vornberger were graduate students at the Institute of Polar Studies.

Kelly Beatley, Rob Mellors, and I, Chuck Rush, were undergraduate research assistants on the project.

Left to right: Kees Van der Veen and Dr. Ian Whillans.

Dr. Ian Whillans gave me a great piece of advice for doing effective presentations. "Tell them what you are going to tell them. Tell them. Then tell them what you told them." It works! Thanks Ian.

Army Reserve at the South Pole

At the time of the expedition, November 1985 to February 1986, my uncle was a General in the Army Reserve. While attending orientation for the expedition in Washington, D.C., Kelly Beatley and I visited him at the Pentagon. He gave us an Army Reserve banner to take to Antarctica.

Solar Pond - Data Acquisition - College Job

I realize it is not customary to include college jobs after you have been working for a while. However, solar pond technology is kind of cool, so I decided to include it.

I worked there for a couple of years as a research assistant.

One of my jobs was to build and program a multi-channel data acquisition system controlled by an HP calculator.

Sample Output